
by Chantel McGee

May 21, 2017

from CNBC Website

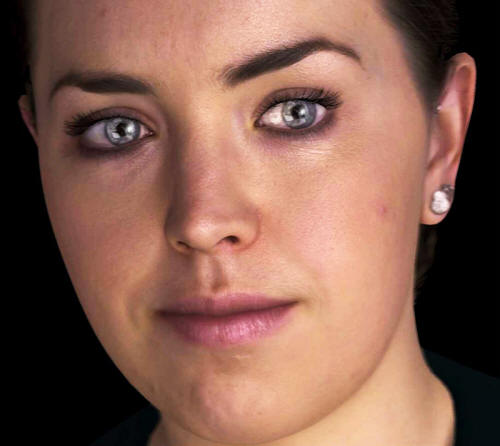

Mark Sagar showing facial emotion recognition technology with Baby X

Source: Soul Machines

The man who built a virtual nervous system explains how humans will interact with machines in ten years.

Mark Sagar invented a virtual nervous system that powers autonomous animated avatars.

He is best known for developing Baby X, a virtual infant that learns through experience.

Sagar says people will learn how to work cooperatively with AI-powered robots.

In ten years, artificially intelligent robots will be living and working with us, according to Dr. Mark Sagar, CEO of Soul Machines, an Auckland, New Zealand-based company that develops intelligent, emotionally responsive avatars.

Sagar, an AI engineer, is the inventor of a virtual nervous system that powers autonomous animated avatars like Baby X, a virtual infant that learns through experience and can "feel" emotions.

"We are creating realistic adult avatars serving as virtual assistants. You can use them to plug into existing systems like IBM Watson or Cortana - putting a face on a chatbot," said Sagar.

Within a decade, humans will be interacting with lifelike, emotionally responsive AI robots, much like the premise of the HBO hit series Westworld, said Sagar.

But before that scenario becomes a reality, robotics will have to catch up to AI technology.

"Robotics technology is not really at the level of control that's required," he said.

The biological models Sagar has developed are building blocks for experimentation.

"We have been working on the deepest aspect of the technology - biologically-inspired cognitive architectures. Simplified models of the brain," he said.

At the core of the technology are virtual neurotransmitters that simulate human neurochemicals such as dopamine, serotonin and oxytocin.

With computer graphics, Sagar says, he can easily develop virtual humans that simulate natural movements like a smirk or the blinking of the eyes, which are not as easy to replicate with robots.

"Robotics materials will have to get to the point where we can start creating realistic simulations. The cost of doing that is really high," said Sagar.

"Creating a robotic owl, for example, would take half a year or something. The economics are quite different from computer graphics," said Sagar.

Sagar says that in about five years the system he has created could be used to power virtual reality games.

"We want to create VR experiences where users can freely move through a world and the characters start to have a life of their own," he said.

"Once you put your AR or VR glasses on, you will have this alternate populated world of fantastic things that people haven't even imagined yet," said Sagar.

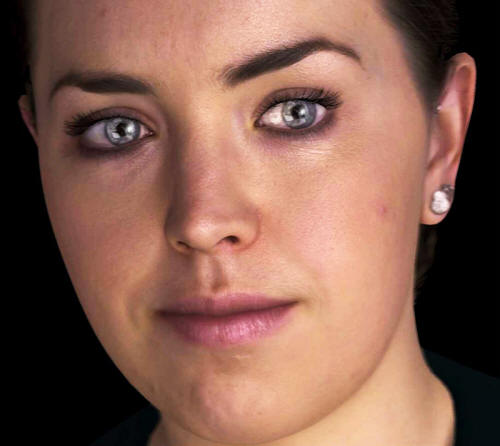

Nadia, a virtual avatar created as part of the National Disability Insurance Scheme for the Australian government.

Source: Soul Machines

The company recently introduced Nadia, a virtual assistant that helps people navigate information as part of the National Disability Insurance Scheme (NDIS) in Australia. (Nadia's voice is that of actress Cate Blanchett.)

"By adding voice you're able to increase the dimensions of communication," he said.

The NDIS developed Nadia to help support its participants with disabilities.

"The Australian government is taking away the boring, painful things like repeated questions, a computer can do that and then the humans can respond to the more complex or more important questions. Freeing them up from mundane tasks," said Sagar.

His goal is to shift the way humans communicate and interact with machines.

"We are creating an interactive loop between the user and the computer. A real-time feedback loop in face-to-face interactions. If [the avatar] isn't understanding, it can express that," he said.

Nadia, like Baby X, can see through a computer's camera and hear through its microphone.

"Because it can see, you can show it things, which starts mixing the real world and the virtual world," he said.

Nadia's ability to express emotions and read the expressions of others is an essential part of the user experience, according to Sagar.

"We create intelligent and emotionally aware interactions between people and machines," he said.

Sagar and his team are looking at other possible applications of the technology, including medical education, psychological research and children's characters that can be used for edutainment.

"It could become a platform for which [animation studios] could build all types of different characters," said Sagar. "It can also be [used] to create characters for medical simulation or training," he said.

There is already a shift in the way humans interact with machines, he said.

"We'll see increasing use of [AI] in lots of types of jobs to enhance people's abilities," he said.

And if we want to know what the future relationship between humans and machines will look like, he says, look no further than our own daily interactions with other humans for clues.

"Humans cooperating together is basically what has created all technology and art and all kinds of things," said Sagar. "When we start adding on to that the creative possibilities of machines, it radically expands," he said.
