
from ThriveGlobal Website

they're better at judging information than know-it-alls.

According to new research (Cognitive and Interpersonal Features of Intellectual Humility) out of Duke University, the grip you keep on your ideas shapes the way you approach the world. How tightly (or not) you cling to your own opinions is called "intellectual humility" (IH), and it could have a big effect on the decisions you make.

People high in IH are less committed to being right about everything and care more about what new information is out in the world, while people low in IH want to believe what they already think.

If you agree with the statement,

Call it informational sensitivity:

The ramifications are broad, says Duke psychologist Mark Leary, since needing to be right all the time and ignoring evidence that conflicts with your opinions can create all sorts of problems, whether in relationships, business, or politics.

Here's one politically relevant example from the paper:

To Leary, the results speak poorly of the American ideal of "sticking to your guns," since that's the opposite of the open- and eager-mindedness that characterizes IH.

Self-worth and identity issues might be in the mix, too.

With IH, it's less about being right than seeing what's right.

Troublingly, other research (Powerful People Think Differently about Their Thoughts) indicates that powerful people take their thoughts more seriously, suggesting that IH may decline the higher you climb in a social hierarchy.

IH may also arise from other, more fundamental personality traits.

In one experiment, Leary found that people high in IH also scored high in openness to experience (a core personality trait measuring curiosity) and in need for cognition (how much you enjoy thinking).

Leary reasons that IH arises from those drives working together, since desiring new ideas and chewing them over has a way of getting you accustomed to changing your mind.

As another experiment indicated, IH also depends on your ability to spot facts. To assess this, the researchers recruited 400 people online for a critical-thinking task evaluating the merits of flossing.

After reporting how regularly they flossed, participants read one of two essays advocating the practice: one relied on strong, scientific arguments citing dental experts, the other on weak, anecdotal arguments from ordinary people.

The results:

The question I really wanted to ask Leary was outside the scope of the study, namely:

After telling me that was a question for another paper, he did offer a tip from his lectures.

When he's teaching this stuff, he likes to check his students' intellectual privilege with a couple of well-placed questions.

...Think Differently about Their Thoughts

October 27, 2016

from NYMag Website

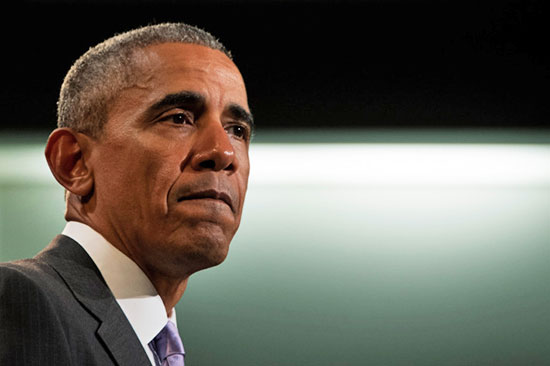

Photo: Jim Watson

Being in power does, in a very real sense, go to people's heads...

Psychologists have found that when people are made to feel powerful, they believe more in the things they're thinking.

This leads to a bunch of wacky, seemingly contradictory behaviors, as Ohio State Ph.D. candidate Geoff Durso explained to Science of Us in an email:

This is explained by the "self-validation theory of judgment," he says, which basically means that when you feel powerful, your thoughts get magnified.

They feel more right than they would if you felt powerless.

And this is where things get even weirder:

For a study (From Power to Inaction: Ambivalence Gives Pause to the Powerful) published this month in Psychological Science, Durso and his colleagues recruited 129 and 197 college students, respectively, for two separate experiments.

Participants were given different descriptions of an employee named Bob: some listed only positive attributes (e.g., Bob beat his earnings goals), some only negative ones (e.g., Bob stole his colleague's mug from the kitchen), and some an even split.

Then the participants completed a writing task in which they recalled an experience from their lives that had made them feel either powerful or powerless, priming that mindset before their decision.

They were also asked how conflicted they felt about Bob's future, and in one study they were asked to decide whether to fire or promote Bob with the click of a mouse. Among participants given ambivalent information about his behavior, those who felt powerful took 16 percent longer to make a decision than those who felt less powerful.

Just as power made people kinder or more dishonest when they were primed for it in the other experiments, it also made them think longer about conflicting information.

It's easy to spot corollaries of this finding out in the wild.

Barack Obama, who largely makes 'good' decisions, has spoken about the burden of power and conflicting information.

In a 2009 interview with U.S. News, Obama said that one of the difficulties of his job was that if a problem had a clear solution, it wouldn't land on his desk in the first place; the buck wouldn't stop there.

When asked about difficult economic decisions, he said that there are always going to be probabilities involved.

The same goes for George W. Bush, as the research team notes in its press release:

It's all evidence that the more powerful you feel, the higher the stakes are - even in your head...
