by nef

The New Economics Foundation

25 January 2010

from

NewEconomics Website

|

NEF is an independent

think-and-do tank that inspires and demonstrates real economic

well-being.

We aim to improve quality of life by promoting innovative solutions

that challenge mainstream thinking on economic, environmental and

social issues. We work in partnership and put people and the planet

first.

NEF (the new economics foundation) is a registered charity founded

in 1986 by the leaders of The Other Economic Summit (TOES), which

forced issues such as international debt onto the agenda of the G8

summit meetings. It has taken a lead in helping establish new

coalitions and organizations such as the Jubilee 2000 debt campaign;

the Ethical Trading Initiative; the UK Social Investment Forum; and

new ways to measure social and economic well-being.

Schumacher College is the UK’s international green college. We are

delighted to be partnering with NEF in publishing Growth isn't

possible: Why we need a new economic direction. As with NEF,

Schumacher College owes its inspiration to the work of the radical

economist and development educator Fritz Schumacher. For twenty

years we have been running a wide range of short courses attracting

the world's leading thinkers on new economics.

Over the past two

years we have hosted two think tanks around sustainable economics

and intelligent growth, leading to a declaration on sustainable

economics, the 'E4 Declaration' and our strong support for the Green

New Deal. This year we took the decision to develop, with help from NEF colleagues, a post-graduate MSc course in New, or 'Transition',

Economics, the first in the country.

We will also be opening a

second campus in 2011 focusing upon the 'great re-skilling', with

the provision of a range of courses on sustainable food and farming,

renewable energy, eco design and sustainable business development,

together with launching an E Learning program.

For further

information on the courses available at Schumacher College please

see our website

www.schumachercollege.org.uk.

Schumacher College is an

initiative of The Dartington Hall Trust. |

Contents

- Greenhouse gas emissions and current climate change
- Scenarios of growth and emission reductions
- Peak Oil, Gas and Coal?
- Carbon capture and storage - The nuclear fusion of the 2010s?
- The limits to nuclear
- The hydrogen economy
- Biofuels
- Geoengineering - Technological saviour or damaging distraction?
- How much can energy efficiency really improve?
- Equity considerations
- If not the economics of global growth, then what? - Getting an economy the right size for the planet

Foreword

If you spend your time thinking that the most important objective of public

policy is to get growth up from 1.9 per cent to 2 per cent and even better

2.1 per cent we’re pursuing a sort of false god there. We’re pursuing it

first of all because if we accept that, we will do things to the climate

that will be harmful, but also because all the evidence shows that beyond

the sort of standard of living which Britain has now achieved, extra growth

does not automatically translate into human welfare and happiness.

Lord Adair Turner1 Chair of the UK Financial Services Authority

Anyone who believes exponential growth can go on forever in a finite world

is either a madman or an economist.

Kenneth E. Boulding Economist and co-founder of General Systems Theory

In January 2006, nef (the new economics foundation) published the report

Growth isn’t working.2 It highlighted a flaw at the heart of the general

economic strategy that relies upon global economic growth to reduce poverty.

The distribution of costs and benefits from economic growth, it

demonstrated, is highly unbalanced. The share of benefits reaching those on

the lowest incomes was shrinking. Paradoxically, in order to generate ever

smaller benefits for the poorest, this system requires those who are

already rich and ‘over-consuming’ to consume ever more.

The unavoidable result under business as usual in the global economy is

that, long before any general and meaningful reduction in poverty has been

won, the very life-support systems that we all rely on are almost certain to

have been fundamentally compromised.

Four years on from Growth isn’t working, this new publication, Growth isn’t

possible, goes one step further and tests that thesis in detail in the

context of climate change and energy. It argues that indefinite global

economic growth is unsustainable. Just as the laws of thermodynamics

constrain the maximum efficiency of a heat engine, economic growth is

constrained by the finite nature of our planet’s natural resources (biocapacity).

As economist Herman Daly once commented, he would accept the possibility of

infinite growth in the economy on the day that one of his economist

colleagues could demonstrate that Earth itself could grow at a commensurate

rate.3

Whether or not the stumbling international negotiations on climate change

improve, our findings make clear that much more will be needed than simply

more ambitious reductions in greenhouse gas emissions.

This report concludes

that a new macroeconomic model is needed, one that allows the human

population as a whole to thrive without having to rely on ultimately

impossible, endless increases in consumption.

Andrew Simms

Victoria Johnson

January 2010


Introduction

We really have to come up with new metrics and new measures by which we look

at economic welfare in a much larger context than just measuring GDP, which

I think is proving to be an extremely harmful way of measuring economic

progress.

R K Pachauri Ph.D,4

Chairman, Intergovernmental Panel on Climate Change

Director-General, The Energy and Resources Institute, Director, Yale Climate

and Energy Institute

Towards what ultimate point is society tending by its industrial progress?

When the progress ceases, in what condition are we to expect that it will

leave mankind?

John Stuart Mill (1848) 5

From birth to puberty a hamster doubles its weight each week. If, then,

instead of levelling-off in maturity as animals do, the hamster continued to

double its weight each week, on its first birthday we would be facing a nine

billion tonne hamster. If it kept eating at the same ratio of food to body

weight, by then its daily intake would be greater than the total, annual

amount of maize produced worldwide.6

There is a reason that in nature things

do not grow indefinitely.
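As a rough check on the hamster arithmetic, the sketch below doubles a weight once a week for 52 weeks. The birth weight of 2 grams is an assumption made purely for illustration (the report does not state one); with it, the result lands at roughly nine billion tonnes.

```python
# Illustrative check of the doubling-hamster arithmetic.
# ASSUMPTION: a birth weight of 2 grams (not stated in the report).
BIRTH_WEIGHT_GRAMS = 2.0
WEEKS_IN_YEAR = 52
GRAMS_PER_TONNE = 1_000_000


def weight_after_weekly_doubling(start_grams: float, weeks: int) -> float:
    """Return the weight in grams after doubling once per week."""
    return start_grams * 2 ** weeks


final_grams = weight_after_weekly_doubling(BIRTH_WEIGHT_GRAMS, WEEKS_IN_YEAR)
final_tonnes = final_grams / GRAMS_PER_TONNE
print(f"Weight on first birthday: {final_tonnes / 1e9:.1f} billion tonnes")
```

Because the result scales linearly with the starting weight, a different assumed birth weight changes the final figure in proportion but not its order of magnitude.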

The American economist Herman Daly argues that growth’s first, literal

dictionary definition is,

‘…to spring up and develop to maturity. Thus the

very notion of growth includes some concept of maturity or sufficiency,

beyond which point physical accumulation gives way to physical

maintenance’.7

In other words, development continues but growth gives way to

a state of dynamic equilibrium - the rate of inputs is equal to the rate of

outputs so the composition of the system is unchanging in time.8

For

example, a bath would be in dynamic equilibrium if water flowing in from the

tap escapes down the plughole at the same rate. This means the total amount

of water in the bath does not change, despite being in a constant state of

flux.

In January 2006, nef (the new economics foundation) published the report

Growth isn’t working.9

It highlighted a flaw at the heart of the economic

strategy that relies overwhelmingly upon economic growth to reduce poverty.

The distribution of costs and benefits from global economic growth, it

demonstrated, is highly unbalanced. The share of benefits reaching those on

the lowest incomes was shrinking. Paradoxically, in order to generate ever

smaller benefits for the poorest, this system requires those who are

already rich and ‘over-consuming’ to consume ever more.

The unavoidable result, the report points out, is that, with business as

usual in the global economy, long before any general and meaningful

reduction in poverty has been won, the very life-support systems we all rely

on are likely to have been fundamentally compromised.

Four years on from Growth isn’t working, Growth isn’t possible goes one step

further and tests that thesis in detail in the context of climate change and

energy. It argues that indefinite global economic growth is unsustainable.

Just as the laws of thermodynamics constrain the maximum efficiency of a

heat engine, economic growth is constrained by the finite nature of our

planet’s natural resources (biocapacity).

As Daly once commented, he would

accept the possibility of infinite growth in the economy on the day that one

of his economist colleagues could demonstrate that Earth itself could grow

at a commensurate rate.10

The most recent data on human use of biocapacity sends a number of

unfortunate signals for believers in the possibility of unrestrained growth.

Our global ecological footprint is growing, further overshooting what the

biosphere can provide and absorb, and in the process, like two trains

heading in opposite directions, we appear to be actually shrinking the

available biocapacity on which we depend.

Globally we are consuming nature’s services - using resources and creating

carbon emissions - 44 per cent faster than nature can regenerate and

reabsorb what we consume and the waste we produce. In other words, it takes

the Earth almost 18 months to produce the ecological services that humanity

uses in one year.

The UK’s footprint has grown such that if the whole world

wished to consume at the same rate it would require 3.4 planets like

Earth.11
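The 18-month figure follows directly from the 44 per cent overshoot. A minimal sketch of the conversion, in which the function name is hypothetical and chosen only for illustration:

```python
# Convert an overshoot percentage into the time the Earth needs
# to regenerate one year's worth of human demand.
MONTHS_PER_YEAR = 12


def months_to_regenerate_one_year(overshoot_percent: float) -> float:
    """Months needed to regenerate what humanity uses in one year."""
    demand_ratio = 1 + overshoot_percent / 100  # demand relative to regeneration
    return demand_ratio * MONTHS_PER_YEAR


months = months_to_regenerate_one_year(44)
print(f"{months:.1f} months")  # a little over 17 months, i.e. 'almost 18'
```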

Growth forever, as conventionally defined (see Box 1), within fixed, though

flexible, limits isn’t possible. Sooner or later we will hit the biosphere’s

buffers. This happens for one of two reasons: either because a natural resource

becomes over-exploited to the point of exhaustion, or because more waste is

dumped into an ecosystem than can be safely absorbed, leading to dysfunction

or collapse. Science now seems to be telling us that both are happening, and

sooner, rather than later.

Yet, for decades, it has been a heresy punishable by career suicide for

economists (or politicians) to question orthodox economic growth.

As the

British MP Colin Challen quipped in 2006,

‘We are imprisoned by our

political Hippocratic oath: we will deliver unto the electorate more goodies

than anyone else.’12

|

Box 1: What is growth?

The question is deceptive,

because the word has many applications. They range from the

description of biological processes to more abstract notions of

personal development. But, when used to describe the economy, growth

has a very specific meaning. This often causes confusion.

Growth tends to be used synonymously with all things that are good.

Plants grow, children grow, how could that be bad? But, of course,

even in nature, growth can be malign, as in the case of cancer

cells.

In economics ‘growth’, or the lack of it, describes the trajectory

of Gross Domestic Product and Gross National Product, two slightly

different measures of national income (they differ, basically, only

in that one includes earnings from overseas assets). The value of

imports is deducted and the value of exports added.

Hence, an economy is said to be growing if the financial value of

all the exchanges of goods and services within it goes up. The

absence of growth gets described, pejoratively, as recession.

Prolonged recessions are called depressions.

Yet, it is not that simple. An economy may grow, for example,

because money is being spent on clearing up after disasters or

pollution, or on controlling rising crime or widespread disease. You may

also have ‘jobless growth,’ in which the headline figure for GDP

rises but new employment is not generated, or environmentally

destructive growth in which a kind of false monetary value is

created by liquidating irreplaceable natural assets on which

livelihoods depend.

The fact that an economy is growing tells you nothing about the

‘quality’ of economic activity that is happening within it.

Conversely, history shows that in times of recession, life

expectancy can rise, even as livelihoods are apparently harmed. This

happens in rich countries probably due to force of circumstances, as

people become healthier by consuming less and exercising more, using

cheaper, more active forms of transport such as walking and cycling.

It is possible, in other words, to have both ‘economic’ and

‘uneconomic’ growth and we should not assume that growth per se is a

good thing, to be held on to at all costs. |

The growth debate: historical context

There is a kind of reverse political correctness that prevents growth being

debated properly.

Yet this has not always been true. Historically, there

have been vigorous debates on the optimal scale for the economy, which we

survey briefly towards the end of this report (also summarized in Box 2

below).

More familiarly, the 1960s and early 1970s saw a vigorous debate on the

environmental implications of growth. But this was sometimes hampered by

insufficient data. Scientists at the Massachusetts Institute of Technology

(MIT) were commissioned by the

Club of Rome to research and publish the

controversial Limits to growth, which came out in 1972. Since then, the

original report has been successively revised and republished.

Matthew Simmons, founder of the world’s largest energy investment banking

firm, commented on publication of the 2004 update that its message was more

relevant than ever and that we, ‘wasted 30 valuable years of action by

misreading the message of the first book’.13

Originally dismissed and

criticized for ‘crying wolf’, the report has, in fact, stood the test of

time. A study in 2008 by physicist Graham Turner from CSIRO (Commonwealth

Scientific and Industrial Research Organization), Australia’s leading

scientific research institute, compared its original projections with 30

years of subsequent observed trends and data.14 His research showed that

they ‘compared favorably’.

Less well known is that in this fairly recent period, there was also a

significant debate on the desirability of economic growth from the point of

view of social and individual, human well-being.15,16,17 It is disciplines

other than economics that have seemed able to view the issue of growth less

dogmatically, asking difficult questions and making inconvenient

observations, their views apparently less constrained by hardened doctrine.

For example, the implications of ‘doubling’, graphically represented by our

voracious hamster, were addressed in May 2007 by Roderick Smith, Royal

Academy of Engineering Research Professor at Imperial College, London. The

physical view of the economy, he said, ‘is governed by the laws of

thermodynamics and continuity’, and so, ‘the question of how much natural

resource we have to fuel the economy, and how much energy we have to

extract, process and manufacture is central to our existence.’18

Engineers must deal every day with the stuff, the material, the ‘thingyness’

of the world around them, the stresses and strains that make things stand

up, fall down, last or wear out. Because of this, they are perhaps more in

tune with the real world of resources than the economist working with

abstract mathematical simplifications of life.

Hence Smith homed in on one of the economy’s most important characteristics

- its ‘doubling period’, by which its bulk multiplies in proportion to its

current size. Even low growth rates of around 3 per cent, he points out,

lead to ‘surprisingly short doubling times’. Hence, ‘a 3 per cent growth

rate, which is typical of the rate of a developed economy, leads to a

doubling time of just over 23 years. The 10 per cent rates of rapidly

developing economies double the size of the economy in just under 7 years.’

But then, if you are concerned about humanity’s ecological debt, comes what

Smith quaintly calls the ‘real surprise’. Because, according to Smith, ‘each

successive doubling period consumes as much resource as all the previous

doubling periods combined’, just as 8 exceeds the sum of 1, 2 and 4.

He added, almost redundantly, as jaws in the room fell open,

‘this little appreciated

fact lies at the heart of why our current economic model is unsustainable.’
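Smith's figures can be reproduced with a few lines of arithmetic. The sketch below assumes continuous compounding, where the doubling time is ln(2) divided by the growth rate; that assumption is ours, chosen because it matches the quoted 'just over 23' and 'just under 7' years (with annual compounding the 10 per cent case comes out slightly above 7). The function names are hypothetical.

```python
import math


def doubling_time_years(growth_rate: float) -> float:
    """Doubling time under continuous compounding: ln(2) / rate."""
    return math.log(2) / growth_rate


def consumption_per_doubling_period(periods: int) -> list[int]:
    """Relative resource use in each successive doubling period (1, 2, 4, ...)."""
    return [2 ** k for k in range(periods)]


for rate in (0.03, 0.10):
    print(f"{rate:.0%} growth: doubles in {doubling_time_years(rate):.1f} years")

usage = consumption_per_doubling_period(4)  # [1, 2, 4, 8]
# The latest period compared with all earlier periods combined.
print(usage[-1], ">", sum(usage[:-1]))
```

The last line is the 'real surprise' in miniature: whatever the number of periods, the most recent one outweighs the sum of all those before it.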

Why do economies grow?

We should ask the simple question, why do

economies grow? And, why do people worry that it will be a disaster if they

stop? The answers can be put reasonably simply.

For most countries in much of human history, having more stuff has given

human beings more comfortable lives. Also, as populations have grown, so

have the economies that housed, fed, clothed and kept them.

Yet, there has long been an understanding in the quiet corners of economics,

as well as louder protests in other disciplines, that growth cannot and need

not continue indefinitely.

As John Stuart Mill put it in 1848,

‘the increase

of wealth is not boundless: that at the end of what they term the

progressive state lies the stationary state.’19

The reasons why growth is not ‘boundless’ have also long been known.

Even

if the modern reader has to make allowances for the time in which Mill

wrote, his meaning remains clear:

‘It is only in the backward countries of the

world that increased production is still an important object: in those

most advanced, what is economically needed is a better distribution.’20

|

Box 2. No-growth economics: a select

chronology of books and papers

In contemplating any

progressive movement, not in its nature unlimited, the mind is not

satisfied with merely tracing the laws of the movement; it cannot

but ask the further question, to what goal? Towards what ultimate

point is society tending by its industrial progress? When the

progress ceases, in what condition are we to expect that it will

leave mankind?

It must always have been seen, more or less distinctly, by political

economists, that the increase of wealth is not boundless: that at

the end of what they term the progressive state lies the stationary

state, that all progress in wealth is but a postponement of this,

and that each step in advance is an approach to it.21

John Stuart Mill, 1848

1821 On the principles of political economy and taxation (3rd edition) by David Ricardo (on the ‘Stationary State’)

1848 Principles of political economy by John Stuart Mill (on the ‘Stationary State’, in Book IV, Chapter VI)

1883 Human labour and the unit of energy by Sergei Podolinsky

1922 Cartesian economics by Frederick Soddy

1967 The costs of economic growth by E J Mishan

1971 The entropy law and the economic process by Nicholas Georgescu-Roegen

1972 Limits to growth: A report for the Club of Rome’s project on the predicament of mankind by Donella Meadows

1973 Small is beautiful: A study of economics as if people mattered by E F Schumacher

1973 Toward a steady state economy by Herman E Daly (ed)

1977 The economic growth debate: An assessment by E J Mishan

1977 Social limits to growth by Fred Hirsch

1978 The economic growth debate: Are there limits to growth? by Lawrence Pringle

1982 Overshoot by William R Catton

1987 Our common future by the World Commission on Environment and Development

1989 Beyond the limits to growth: A report to the Club of Rome by Eduard Pestel

1992 The growth illusion: How economic growth has enriched the few, impoverished the many, and endangered the planet by Richard Douthwaite and Edward Goldsmith

1995 Our ecological footprint: Reducing human impact on the Earth by William Rees and Mathis Wackernagel

1996 Beyond growth by Herman E Daly

1997 Sustainable development: Prosperity without growth by Michael J Kinsley

2004 Limits to growth: The 30 year update by Donella Meadows, Jorgen Randers and Dennis Meadows

2004 Growth fetish by Clive Hamilton

2005 Ecological debt: The health of the planet and the wealth of nations by Andrew Simms

2006 Growth isn’t working: The unbalanced distribution of benefits and costs from economic growth by David Woodward and Andrew Simms

2008 Managing without growth by Peter Victor

2009 Prosperity without growth by Tim Jackson

2010 Growth isn’t possible by Andrew Simms, Victoria Johnson and Peter Chowla.

|

So why is it that, over 160 years after Mill wrote those words, rich nations

are more obsessed than ever with economic growth?

Countries like the UK are decades past the point where increases in national

income, measured by GNP and GDP, lead to similar increases in human

well-being and life expectancy.22 Yet no mainstream politician argues

against the need for economic growth.

The reasons are partly to do with policy habits, partly political posturing,

and partly because we have set our economic system up in such a way that it

has become addicted to growth.

Growth-based national accounting became popular in the 1930s as a guide to

quantify the value of government interventions to rescue economies from the

depression, and also later as a tool to aid increased production as part of

the war planning effort. But the new measurement came with a very big health

warning attached.

One of the indicator’s key architects, the economist Simon Kuznets, was

explicit about its limitations. Growth did not measure quality of life, he

made clear, and it excluded vast and important parts of the economy where

exchanges were not monetary. By this he meant family, care and community

work - the so-called ‘core economy’ which makes society function and

civilization possible.23

So, for example, if the money economy grows at the

expense of, and by cannibalizing the services of, the core economy - such as

in the way that profit-driven supermarkets grow at the expense of

communities - it is a kind of false growth. Similarly if the money economy

grows simply by liquidating natural assets that are treated as ‘free income’

this, too, is a kind of ‘uneconomic growth’.

Also, it was repeatedly observed that growth in aggregate national income

couldn’t tell you anything about the nature of the economy, whether activity

was good or bad. Spending on prisons, pollution and disasters pushed up GDP

just as surely as spending on schools, hospitals and parks. But growth

nevertheless became the eclipsing indicator of an economy’s virility and

success.

This was despite Robert Kennedy pointing out, in 1968, that growth

measured everything apart from ‘that which makes life worthwhile’.24

The problem with our economic system is now threefold. First, governments

plan their expenditure assuming that the economy will keep growing. If it

then didn’t grow, there would be shortfalls in government income with

repercussions for public spending. The same is true for all of us; for

example, when we plan for old age by putting our savings into pensions.

Today, though, many economies like the UK are facing this problem in any

case. Ironically, however, it comes as a direct consequence of the economic

damage caused by the behavior of weakly regulated banks, which were busy

chasing maximum rates of growth through financial speculation.

Secondly, neo-liberal economies typically put legal obligations on publicly

listed companies to grow. They make the maximization of returns to

shareholders the highest priority for management.

As major investors are

generally footloose, they are free to take their money wherever the highest

rates of return and growth are found.

|

Box 3. Climate change is not the only

limit

This report focuses mainly on

how the need to preserve a climate system that is conducive to human

society puts a limit on orthodox economic growth. But climate change

is not the only natural parameter. Other limits of our biocapacity

also need respecting if we are to maintain humanity’s environmental

life support system. Two important areas of research, described

below, provide examples of attempts to define some of those limits

and raise questions for economists and policy makers.

The Ecological Footprint 25

From a methodology first developed by the Canadian geographer

William Rees in the early 1980s, the ecological footprint is now a

well-established technique being constantly refined as available

data and understanding of ecosystems improves. It compares the

biocapacity available to provide, for example, farmland, fisheries

and forestry, as well as to absorb waste from human economic

activity, with the rate at which humanity consumes those resources

and produces waste, for example in the form of greenhouse gas

emissions.

The 2009 set of Global Footprint Accounts reveals that the human

population is demanding nature’s services, using resources and

generating CO2 emissions, at a rate that is 44 per cent faster than

what nature can replace and reabsorb. That means it takes the Earth

just under 18 months to produce the ecological services humanity

needs in one year. Very conservatively, for the whole world to

consume and produce waste at the level of an average person in the

United Kingdom, we would need the equivalent of at least 3.4 planets

like Earth. Most worryingly, there are signs that available

biocapacity is actually reducing, being worn out, by current levels

of overuse, setting up a negative spiral of over-consumption and

weakening capacity to provide.

Planetary boundaries

A much more recent approach, published in science journal Nature in

September 2009, uses the notion of ‘planetary boundaries.’26 The

work, co-authored by 29 leading international scientists, identifies

nine processes in the biosphere for which the researchers considered

it necessary to ‘define planetary boundaries’.

They are:

- climate change
- rate of biodiversity loss (terrestrial and marine)
- interference with the nitrogen and phosphorus cycles
- stratospheric ozone depletion
- ocean acidification
- global freshwater use
- change in land use
- chemical pollution
- atmospheric aerosol loading

Of these nine, the authors

found that three boundaries had already been transgressed: climate

change, interference with the nitrogen cycle, and biodiversity loss

(see Table 1).

Setting boundaries is complex. Earth systems change and react in

often non-linear ways. The erosion or overburdening of one system

can affect the behavior and resilience of another. As the research

points out, ‘If one boundary is transgressed, then other boundaries

are also under serious risk. For instance, significant land-use

changes in the Amazon could influence water resources as far away as

Tibet.’

Nevertheless, albeit with caveats, the authors identify

boundaries for seven of the nine processes, leaving the safe

thresholds for atmospheric aerosol loading and chemical pollution

still ‘to be identified.’

The work on planetary boundaries complements (although unusually

doesn’t reference) the ecological footprint method. The latter, due

to a lack of previous research on safe rates of harvest and waste

dumping, merely produces a best assessment of full available

biocapacity and compares it to human rates of consumption and waste

generation. This conservatively, or rather generously, creates the

impression that all biocapacity might be available for human use.

The attempt to define more nuanced planetary boundaries concerning

different earth systems, is set to produce more realistic, and

almost inevitably smaller assessments of the share of the earth’s

resources and services available for safe human economic use.

Table 1. Identifying planetary boundaries that should not be crossed. Limits for earth processes in grey have already been transgressed.

|

Thirdly, in the modern world, money is lent into existence by banks with

interest rates attached.

Because for every pound, dollar, yen or Euro

borrowed, more must be paid back, economies that function largely on

interest-bearing money have a built-in growth dynamic.

The problem extends beyond the economy. Our increasingly consumerist society

demands ever higher consumption to demonstrate social status - conspicuous

consumption.27

To see how advanced, industrialized nations might escape from

a locked-in growth dynamic, see the conclusion to this report.

First principles - the laws of thermodynamics

The first law says you can’t win, the

second law says you can’t even break even

C.P. Snow

The physicist and novelist C.P. Snow became

famous for trying to bridge the gap between the ‘two cultures’, science and

the arts. When he described the alleged division, he made reference to the

failure of those in the humanities to understand the Second Law of

Thermodynamics.

While delivering The Rede Lecture in 1959, Snow observed,

‘Once or twice I have been provoked and have asked the company how many of

them could describe the Second Law of Thermodynamics. The response was cold:

it was also negative. Yet I was asking something which is about the

scientific equivalent of: “Have you read a work of Shakespeare’s?” ’ 28

Yet, 50 years after Snow delivered his lecture, while scientists are still

thought to be illiterate if they haven’t read Shakespeare, how many experts

in the arts would be able to explain the laws of thermodynamics?

This is not

simple point-scoring between disciplines. Politicians and civil servants

tend to be drawn from the fields of economics, politics, history and the

arts.29 This could go some way to explain why, on one level, the mainstream

political and economic establishment have little comprehension about the

finiteness of the planet’s resources and the limits to efficiency.

One representative from a conservative economic think tank was questioned on

where the resources to fuel infinite economic growth would come from. It was

at a public debate in the Dana Centre, part of the Science Museum in London.

After thinking for a moment, he answered confidently: ‘We could mine asteroids.’

The First Law

The First Law of Thermodynamics,

formalized by the nineteenth-century German physicist Rudolf Clausius, is a

generalization of the universal law of energy conservation.30 The First

Law states that within a closed system, energy can neither be created

nor destroyed.

For example, energy within the Universe is constant, or

the amount of energy lost in a steady-state process cannot be greater

than the amount of energy gained. Thus, a measure of heat transferred

into a system will result in an increase in temperature and in the

system’s ability to do work.

The Second Law

The Second Law of Thermodynamics applies a direction to the conservation

of energy described by the First Law. It says that not all heat input

into a system can be converted into useful work.

Put simply,

transferring heat into work with 100 per cent efficiency is impossible.

Some heat will always escape into the surrounding environment as wasted

energy. Ultimately, therefore, all energy tends to heat or disorder

(entropy) and no transaction of energy from one type to another is

completely reversible.

Because the laws of thermodynamics imply that entropy will always

increase, Clausius imagined that, sometime in the distant future, the

universe would eventually fall prey to a ‘heat death’. Entropy would have

increased to its maximum level and no more work could be done.

As entropy increases, ‘free energy’ or exergy decreases. Exergy describes

the maximum useful work obtainable from an energy system at a given

state in a specified environment.

In other words, it represents the

thermodynamic ‘quality’ of an energy carrier based on the Second Law.

For example, electricity has a high degree of exergy and is widely

regarded as an efficient carrier of energy. Low-temperature hot water,

however, has low exergy and whilst it is also a carrier of energy, can

generally only be used for heating purposes.
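One common way to put a number on this ‘quality’ is the Carnot factor, 1 - T0/T, which gives the largest fraction of heat at absolute temperature T that could in principle be turned into work in surroundings at temperature T0. The sketch below is illustrative only: the 60 °C water and 20 °C surroundings are assumed values not taken from the report, and the water is treated as a constant-temperature heat source, which is a simplification. Electricity, by comparison, is conventionally treated as having an exergy fraction close to 1.

```python
KELVIN_OFFSET = 273.15


def carnot_factor(source_celsius: float, ambient_celsius: float) -> float:
    """Maximum fraction of heat convertible to work: 1 - T0/T (in kelvin)."""
    t_source = source_celsius + KELVIN_OFFSET
    t_ambient = ambient_celsius + KELVIN_OFFSET
    return 1 - t_ambient / t_source


# ASSUMED illustrative temperatures, not figures from the report.
hot_water = carnot_factor(source_celsius=60, ambient_celsius=20)
print(f"Exergy fraction of 60 C hot water: {hot_water:.0%}")
```

Under these assumptions only around an eighth of the heat in the water could ever be converted into work, which is why such low-temperature heat is in practice useful mainly for heating.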

According to the second law of thermodynamics, order (sometimes called

negative-entropy, neg-entropy) can be increased only at the expense of

generating more disorder (entropy) elsewhere.31 Importantly, this means

that human-created order - the emergence of structured civilization and

latterly that of advanced industrialized society - will also result in

large quantities of entropy in the surrounding environment.32

From this, the potential for environmental damage from economic activity

becomes clear. Industrial activities cannot continue without energy, nor

can that energy be generated without some environmental impact.

This observation was the basis of Herman Daly’s ‘steady-state economy’

which, building on the work of economist Nicholas Georgescu-Roegen,

challenges humanity’s failure to notice the entropic nature of the

economic process (although it is, more fairly, a specific failure of

mainstream economics).33, 34

While the Second Law means that energy efficiency in any process can

never in reality be 100 per cent, the practical limits of energy

efficiency approached in the real world are much lower.

This is discussed

in more detail later on in the report.

Why the ‘unthinkable’ must be debated

The meaning of sustainability has been blurred since the flurry of activity

that led up to the United Nations’ 1992 Earth Summit in Brazil. Today it is

applied as much to merely sustaining economic growth as it is to preserving

a livable planet for future generations.

This mainstream view of sustainable development is quite different from

definitions of so-called ‘strong sustainability’ (Box 4 below). The ‘mainstream’

view tends to emphasize decoupling economic growth from environmental

degradation (including climate change). And, to drive that dynamic it relies

heavily on market-based initiatives - the ‘ecological modernization’ of the

economy, defined by German sociologist Joseph Huber as a twin process of

‘ecologising the economy’ and ‘economising ecology’.35

Ecological modernization assumes that already existing political, economic

and social institutions can adequately deal with environmental problems - focusing almost exclusively on industrialism, with much less consideration

(if any at all) being given to the accumulative process of capitalism,

military power or the nation-state system, even though all contribute in

different ways to environmental degradation by being instrumental to growth

and international competitiveness.36

Policies of environmental or ecological modernization include: the ‘polluter

pays’ principle, eco-taxes, government purchasing initiatives, consumer

education campaigns and instituting voluntary eco-labeling schemes. Such a

strategy relies on small acts of individual consumer sovereignty

(sustainable consumption) to change the market.37

The growing emphasis on

the individual to practice sustainable consumption as a cure-all, however,

is awkwardly juxtaposed against the systemic nature of the problems. There

is now a growing view and body of evidence that ecological modernization has

not been effective in reducing carbon emissions. In fact, some would argue

it has acted in the opposite direction, driving emissions upwards.

Environmental debates, therefore, seem caught between paralyzing catastrophe

scenarios, and ill-thought-out technological optimism. We are told that

either the planet would like to see the back of us, or that we can have the

planet and eat it. The truth, as ever, is more complex and interesting.

The point of this report, Growth isn’t possible, is to remove an obstacle to

exploring the possibilities in that more nuanced reality.

Mainstream

economics is frozen in its one-eyed obsession with growth. Across the

political spectrum of governments, pursuing international competitiveness

and a rising GDP is still seen as a panacea for social, economic and

environmental problems. Unfortunately, a combination of the science of

climate change, natural resource accounting, economic realities and the laws

of physics tells us that this assertion has become quite detached from

reality.

Our earlier report, Growth isn’t working, showed that global

economic growth is a very inefficient way to reduce poverty, and is becoming

even less so.

Why growth isn’t working

Between 1990 and 2001, for every $100

worth of growth in the world’s income per person, just $0.60, down from

$2.20 the previous decade, found its target and contributed to reducing

poverty below the $1-a-day line.38

A single dollar of poverty reduction took

$166 of additional global production and consumption, with all its

associated environmental impacts. It created the paradox that ever smaller

amounts of poverty reduction amongst the poorest people of the world

required ever larger amounts of conspicuous consumption by the rich.
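The $166 figure follows directly from the $0.60 share quoted above. A minimal sketch of the arithmetic is given below; the function name is hypothetical, and the calculation for the previous decade simply applies the same division to the $2.20 figure from the text.

```python
def growth_needed_per_dollar(poverty_share_per_100: float) -> float:
    """Dollars of global growth needed for one dollar of poverty reduction,
    given how many dollars out of every $100 of growth reach the poorest."""
    if poverty_share_per_100 <= 0:
        raise ValueError("share must be positive")
    return 100.0 / poverty_share_per_100


# Shares quoted in the text: $0.60 per $100 (1990-2001), $2.20 the decade before.
needed_1990s = growth_needed_per_dollar(0.60)
needed_1980s = growth_needed_per_dollar(2.20)

print(f"1990-2001: about ${needed_1990s:.1f} of growth per $1 of poverty reduction")
print(f"Previous decade: about ${needed_1980s:.1f} of growth per $1 of poverty reduction")
```

On these figures, the amount of growth needed to deliver a dollar of poverty reduction more than tripled from one decade to the next.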

Growth wasn’t (and still isn’t) working.39 Yet, so deeply engrained is the

commitment to growth, that to question it is treated as a challenge to the

whole exercise of economics. Nothing could be further from the truth. This

report is a companion volume to nef’s earlier and ongoing research. It is

written in the hope that we can begin to look at the fascinating

opportunities for economics that lie beyond the doctrine - it could be

called dogma - of growth.

One of the few modern economists to have imagined such possibilities in any

depth is Herman Daly.40

The kind of approach called for in a world

constrained by fuzzy but fundamental limits to its biocapacity is one,

according to Daly, that is:

‘…a subtle and complex economics of

maintenance, qualitative improvements, sharing, frugality, and adaptation

to natural limits. It is an economics of better, not bigger’.41

|

Box 4. Sustainable development?

Civil servant and environmental

economist, Michael Jacobs described six core ideals and themes

within sustainable development.

These include:42

- The integration of the economy and environment: economic decisions to have regard to their environmental consequences
- Intergenerational obligation: current decisions and practices to take account of their effect on future generations
- Social justice: all people have the equal right to an environment in which they can flourish (or have their basic human needs met)
- Environmental protection: conservation of resources and protection of the non-human world
- Quality of life: a wider definition of human well-being beyond narrowly defined economic prosperity
- Participation: institutions to be restructured to allow all voices to be heard in decision-making (procedural justice)

The core ideals cover three

fields - the environment, economy and society - the three pillars of

sustainability. A view of sustainable development that encompasses

all three dimensions can be defined as ‘strong sustainability’.

According to Andrew Dobson, Professor of Politics at Keele

University, ‘strong sustainability’ will require, ‘radical changes

in our relation with the non-human natural world, and in our mode of

social and political life’.43 |

Relying on the wished-for trickle-down of income from global growth as the

main economic strategy to meet human needs, maximize well-being and achieve

poverty reduction appears ineffective and frequently counter-productive, and is,

in all practical senses, impossible.

Given current, highly unequal patterns of the distribution of benefits from

growth, to get everyone in the world onto an income of at least $3 per day - the level around which income stops having an extreme effect on life

expectancy - implies, bizarrely, the need for 15 planets’ worth of resources

to sustain the requisite growth. Even then, environmental costs would fall

disproportionately, and counter-productively, on the poorest - the very

people the growth is meant to benefit.44

So, globally, including in relatively rich countries, there is a danger of

locking in a self-defeating spiral of over-consumption by those who are

already wealthy, justified by the prospect of achieving marginal increases in wealth

amongst the poorest members of society.

Another assault on the doctrine of growth stems from the large but still

emerging field of studying life-satisfaction and human well-being. It

presents a critique of how, in industrialized countries, patterns of work

and rising consumption are promoted and pursued, yet repeatedly fail to

deliver the expected gains in life satisfaction. At the same time, these

patterns of (over)work potentially erode current well-being by undermining

family relationships and the time needed for personal development.45

The assumption that increasing efficiency, whether it is energy

efficiency or resource efficiency, will allow us to continue along the same,

ever expanding consumption path is wrong. It does, however, allow us to

skirt around the bigger issue relating to work-and-spend lifestyles that

developed nations have become so accustomed to, and which are

unquestioningly assumed to be the correct and best development models for

developing nations.

In fact, a growing body of literature shows that once people have enough to

meet their basic needs and are able to survive with reasonable comfort,

higher levels of consumption do not tend to translate into higher levels of

life satisfaction, or well-being.46

Instead, people tend to adapt relatively

quickly to improvements in their material standard of living, and soon

return to their prior level of life satisfaction. This is known as becoming

trapped on the ‘hedonic treadmill’, whereby ever higher levels of

consumption are sought in the belief that they will lead to a better life,

whilst simultaneously changing expectations leave people in effect having to

‘run faster’, consuming more, merely to stand still.

National trends in subjective life satisfaction (an important predictor of

other hard, quantitative indicators such as health) stay stubbornly flat

once a fairly low level of GDP per capita is reached.47 And, importantly,

only around 10 per cent of the variation in subjective happiness observed in

western populations is attributable to differences in actual material

circumstances, such as income and possessions.48

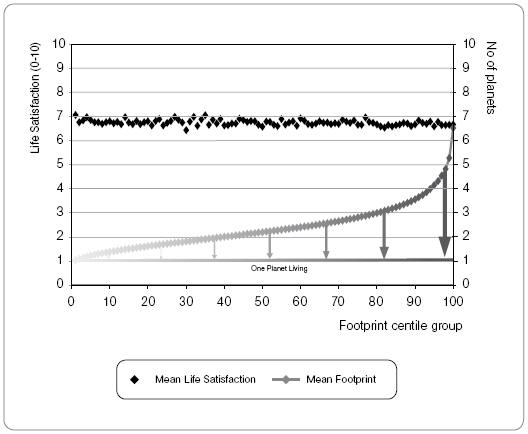

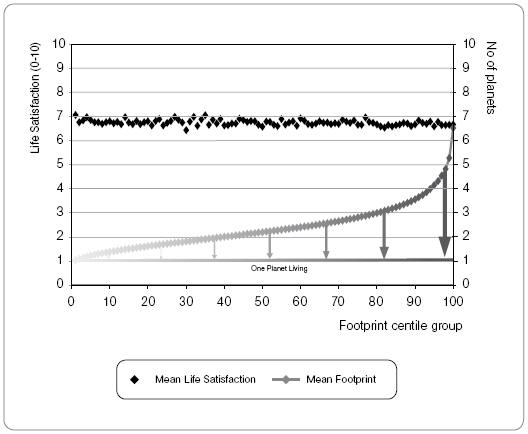

Figure 1 shows the results of an online survey of life satisfaction and

consumption in Europe, gathered by nef. The web-based survey contained

questions about lifestyle - consumption patterns, diet, health, family

history - as well as subjective life satisfaction. Using this data,

estimates of footprint and life expectancy could be calculated.

Over 35,000

people in Europe completed the survey.

Figure 1: Life satisfaction compared to levels of material consumption in Europe.49

The blue line represents the distribution of

ecological footprints across the total sample, expressed in terms of the

number of planets’ worth of resources that would be required if everyone on

the planet were to live the same way.

To the right end of the distribution

are those people with high consumption lifestyles, approaching ‘seven planet

living’. To the left are those whose lifestyles have the least environmental

impact, approaching the planetary fair share ‘one planet living’.

The arrows

depict the nature of the transition that is required both to level and lower

the consumption playing field towards equitable and sustainable use of the

Earth’s resources.

This data represents both a challenge and an opportunity. It is challenging

because it shows starkly the extent of European over-use of planetary

resources. Not only is the distribution of footprint extremely unequal in

this sample, it is also far too high in absolute terms.

But, Figure 1 also

suggests that well-being has little to do with consumption; which, in turn,

allows for the possibility that our collective footprint could be reduced

significantly without leading to widespread loss in well-being.

As one

analyst put it, an initial reduction in energy use of around one-quarter

‘would call for nothing more than a return to levels that prevailed just a

decade or no more than a generation ago’, adding rhetorically:

‘How could

one even use the term sacrifice in this connection? Did we live so

unbearably 10 or 30 years ago that the return to those consumption levels

cannot be even publicly contemplated by serious policymakers?’58

|

Box 5. Life rage

Economic growth is indeed

triumphant, but to no point. For material prosperity does not

make humans happier: the ‘triumph of economic growth’ is not a

triumph of humanity over material wants; rather it is the

triumph of material wants over humanity.50

Professor Richard

Layard, London School of Economics

Studies over the past decade,

using both qualitative and quantitative methods, reveal levels of

anger and moral anxiety about changes in society that were not

apparent 30 years ago.51 Whilst these studies mainly focused on the

UK, the USA and Australia, the findings are, to varying degrees,

applicable to other high-consuming industrialized nations. In other

words, our levels of well-being are being eroded. But why?

Research shows that the strong relationship between life expectancy

and income levels off at a remarkably low level. The influence of

rising income on life satisfaction levels off at higher levels, but

not much higher.52,53 Life expectancy continues to rise in most

countries and this is only partly due to greater wealth; happiness

has not increased in recent decades in rich nations, despite people

having become, on average, much wealthier.54

Social epidemiologist, Professor Richard Wilkinson argues in his

book Impact of inequality: how to make sick societies healthier that

poorer nations with lower wealth inequality tend to have higher

levels of well-being (physical and mental) than more wealthy but

more unequal nations.55

For example, life expectancy in rich nations

shows a strong correlation with relative equality. His more recent

work with co-author Professor Kate Pickett, The Spirit Level, makes

an even stronger case.56 Here they demonstrate that more equal

societies almost always do better against a wide range of social and

environmental indicators.

In Impact of inequality Wilkinson compared various social indicators

in Greece to those in the USA. He found that while Greece has almost

half the per capita GDP, citizens have a longer life expectancy than

the USA. While the USA is the wealthiest nation globally, it has

one of the highest levels of inequality and one of the lowest life expectancies in

the global North.

Furthermore, Wilkinson demonstrates that crime

rates are most strongly correlated to a nation’s level of

inequality, rather than its aggregated wealth. Given this, Wilkinson

concludes that the most equal countries tend to have the highest

levels of trust and social capital.

As Nicholas Georgescu-Roegen, one of the fathers of ecological

economics, argues, as we have become caught up in our obsession with

consumption and material throughput, we have failed to recognise the

‘immaterial flux of the enjoyment of life’.57 |

Despite this, high-consuming lifestyles seem ‘locked-in’ by our economic,

technological and cultural context, which fails to address equality and

instead drives relative poverty.

As the gap between the ‘haves’ and

‘have-nots’ widens, there tends to be a concomitant loss of life

satisfaction, sense of community and, ultimately, a rise in social

disequilibrium.

For example, in an update to the well-known Whitehall Study led by Professor

Michael Marmot at the Department of Epidemiology and Public Health at

University College London, researchers found that subjective socio-economic

status was a better predictor of health status and decline in health status

over time than more objective measures.59,60

This work implies the health impacts of relative poverty are more likely to

be determined by an individual’s perception of his or her socio-economic

status than, beyond a certain level of sufficient consumption, their actual

socio-economic circumstances.

Perceived socio-economic barriers

can therefore impede progressive improvements in overall well-being, as

the physical and mental well-being of those in the lowest strata is

undermined, creating domino effects throughout society.

There are questions to be asked of growth, of its science-based limits, and

more generally of its effectiveness today in meeting human needs and

maximizing well-being. This report suggests that we are reaching the point

at which the doctrine of global economic growth as a central policy

objective and primary strategy for meeting society’s various needs is

becoming redundant.

Later in this report we will argue that focusing only on improvements in

carbon and energy intensity of the economy, as a strategy to combat climate

change, means only that we are buying time, and even then very little. In a

best-case scenario, it delays arrival at critical concentrations of

greenhouse gases by 10-20 years; in a worst-case scenario, it brings no delay

at all.

So let us first address the question, what is, and what should be

accepted as ‘safe’ levels of greenhouse gases in the atmosphere?

Greenhouse gas emissions and current climate change

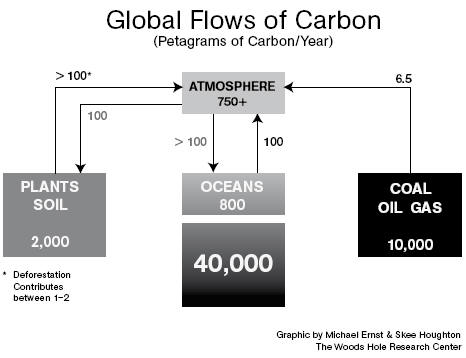

The Earth’s climate system is currently changing at greater rates and in

patterns that are beyond the characteristics of natural variation.

The concentration in the atmosphere today of carbon dioxide (CO2), the most

prevalent anthropogenic greenhouse gas, far exceeds the natural range of

180-300 ppm. The present concentration is the highest during the last

800,000 years and probably during the last 20 million years.61,62,63

In the space of just 250 years, as a result of the Industrial Revolution and

changes to land use, such as the growth of cities and the felling of

forests, we have released cumulatively more than 1800 gigatonnes (Gt) of CO2

into the atmosphere.64 Global atmospheric concentrations of CO2 are now a

record 390 ppm, almost 40 per cent higher than they were at the beginning of

the Industrial Revolution.65, 66

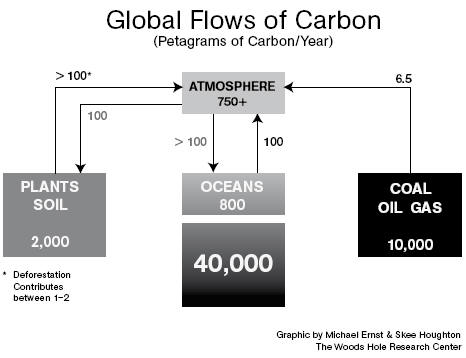

The primary source of the increased concentration of CO2 is unequivocally

the burning of fossil fuels such as coal, oil, and natural gas.67

Annual fossil fuel CO2 emissions have increased year on year from an average

of 23.4 Gt CO2 per year in the 1990s to 30 Gt CO2 per year today.

To put this

in perspective, the increase in annual emissions over the past 20 years is

almost double the total emissions produced by EU27 nations each year.68

Changes in land use have also contributed significantly to increasing rates

of CO2 emissions, contributing around 5.5 Gt CO2 per year to the atmosphere.

We now release just over 1000 tonnes of CO2 into the Earth’s atmosphere

every second.
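The per-second figure can be checked against the annual totals quoted above. The sketch below assumes that the ‘just over 1000 tonnes’ combines fossil fuel emissions (30 Gt CO2 per year) with land-use emissions (5.5 Gt CO2 per year); the report does not state this explicitly, so the combination is an assumption, as are the function and variable names.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # non-leap year

# Annual emissions quoted in the text, in gigatonnes of CO2 per year.
FOSSIL_GT_PER_YEAR = 30.0
LAND_USE_GT_PER_YEAR = 5.5


def tonnes_per_second(gt_per_year: float) -> float:
    """Convert an annual emission rate in Gt (1 Gt = 1e9 tonnes) to tonnes per second."""
    return gt_per_year * 1e9 / SECONDS_PER_YEAR


# Assumption: the report's per-second figure adds both sources together.
total_rate = tonnes_per_second(FOSSIL_GT_PER_YEAR + LAND_USE_GT_PER_YEAR)
fossil_rate = tonnes_per_second(FOSSIL_GT_PER_YEAR)

print(f"Fossil fuels plus land use: {total_rate:,.0f} tonnes of CO2 per second")
print(f"Fossil fuels alone:         {fossil_rate:,.0f} tonnes of CO2 per second")
```

Fossil fuels alone come to a little under 1000 tonnes per second on these numbers, which suggests the figure in the text includes land-use change.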

In 2007, the Intergovernmental Panel on Climate Change (IPCC) Fourth

Assessment Report - a synthesis of peer-reviewed research on climate change,

its causes and effects (including socio-economic consequences) involving

over 2500 scientists worldwide - stated that if fossil fuels continued to be

burnt at the current rate, global average surface temperatures could rise by

4°C by the end of the century, with an uncertainty range of 2.4-6.4°C.69

A more recent study published in the American science journal Proceedings of

the National Academy of Sciences found that the ‘committed’ level of warming

by the end of the century is 2.4°C (1.4-4.3°C) - if atmospheric

concentrations of greenhouse gases are held at 2005 levels. This value is

based on past emissions and includes the warming already observed of 0.76°C

plus 1.6°C of additional warming which is yet to occur due to the thermal

inertia of the climate system and the ‘masking’ by cooling aerosols.70
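The central estimate of 2.4°C is simply the sum of the two components given in the text, rounded to one decimal place. A minimal sketch of that sum follows; the variable names are illustrative only.

```python
# Components of 'committed' warming quoted in the text, in °C.
observed_warming = 0.76   # warming already observed
pending_warming = 1.6     # further warming still to come (thermal inertia, aerosol masking)

committed_warming = observed_warming + pending_warming

print(f"Committed warming: {committed_warming:.2f} °C, "
      f"reported as {round(committed_warming, 1)} °C")
```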

Although 2008 may have been the coolest year of the current decade, it was

still the tenth warmest year since instrumental records began in 1850.71

While observations actually suggest that global temperature rise has slowed

during the last decade, analyses of observations and modeling studies show

that this is due to internal climate variability and that the warming trend

will resume in the next few years.72,73

One of the studies by atmospheric scientists Professors Kyle Swanson and

Anastasios Tsonis ends with the following cautionary note:

‘…there is no

comfort to be gained by having a climate with a significant degree of

internal variability, even if it results in a near-term cessation of global

warming…If the role of internal variability in the climate system is as

large as this analysis would suggest, warming over the 21st century may well

be larger than that predicted by the current generation of models’.74

Indeed, over the course of 2008 and 2009 numerous scientific papers were

published revealing that climate change was far more serious even than

reported in the most recent review of the science by the IPCC.75,76

The

long-term warming trend has had a large impact on mountain glaciers and snow

cover worldwide, and has also changed rainfall patterns and intensity, ocean

salinity, wind patterns and aspects of extreme weather including droughts,

heavy precipitation, heat waves and the intensity of tropical cyclones. Such

changes to the biophysical world are already having harmful impacts on

society, which will worsen with time.

As Professor Stefan Rahmstorf of the Potsdam Institute for Climate Impact

Research reflected in 2007:

‘As climatologists, we’re often under fire

because of our pessimistic message, and we’re accused of overestimating the

problem… But I think the evidence points to the opposite - we may have been

underestimating it.’77

Two years on, at the International Scientific Congress on

Climate Change in March 2009, Rahmstorf confirmed this view.

‘What we are

seeing now is that some aspects are worse than expected’, he said speaking

at a plenary session of the Congress. He continued: ‘I’m frustrated, as are

many of my colleagues, that 30 years after the US National Academies of

Science issued a strong warning on CO2 warming, the full urgency of this

problem hasn’t dawned on politicians and the general public.’78

Dangerous climate change

Science on its own cannot give us the answer to the question of how much

climate change is too much.

Margaret Beckett speaking at the Avoiding Dangerous Climate Change

Conference (February 2005)

Margaret Beckett’s comments highlight the ethical and political dilemma of

what constitutes a tolerable degree of climate change. Science can tell us

what may happen as the temperature rises, but only we can decide what is

tolerable and how far climate change should be allowed to go.

The United Nations Framework Convention on Climate Change (UNFCCC) was

signed by over 160 countries at the United Nations Conference on Environment

and Development held in Rio de Janeiro in June 1992, and came into force in

1994. The objective of the Convention was to slow and stabilize climate

change by establishing an overall framework for intergovernmental efforts to

respond to climate change.

It recognizes the significance of climate change

and the uncertainties associated with future projections. But it also states

that despite uncertainties, mitigating action should be taken - namely a ‘no

regrets’ approach. Furthermore, it recognizes that developed nations

should take the lead, given the responsibility they bear for their

historical emissions.

The long-term objective of the Convention, outlined in Article 2, is to

achieve:

…stabilization of greenhouse gas concentrations in the atmosphere at a level

that would prevent dangerous anthropogenic interference with the climate

system. Such a level should be achieved within a time frame sufficient to

allow ecosystems to adapt naturally to climate change, to ensure that food

production is not threatened and to enable economic development to proceed

in a sustainable manner.79

The burning embers diagram

An important part of the international climate change debate relates to the

interpretation of dangerous climate change. This is of growing importance

and of particular relevance to post-Kyoto negotiations.

In order to codify what ‘dangerous anthropogenic interference’ might mean,

authors of the Third Assessment Report of the IPCC identified ‘five reasons

for concern’.

These are listed below:80

- Risks to unique and threatened systems - e.g., coral reefs, tropical glaciers, endangered species, unique ecosystems, biodiversity hotspots, small island states and indigenous communities.
- Risk of extreme weather events - e.g., the frequency and intensity, or consequences of heat waves, floods, droughts, wildfires, or tropical cyclones.
- Distribution of impacts - some regions, countries and populations are more at risk from climate change than others.
- Aggregate impacts - e.g., the aggregation of impacts into a single metric such as monetary damages, lives affected or lost.
- Risks of large scale discontinuities - e.g., tipping points within the climate system such as partial or complete collapse of the West Antarctic or Greenland ice sheet, or collapse/reduction in the North Atlantic Overturning Circulation.

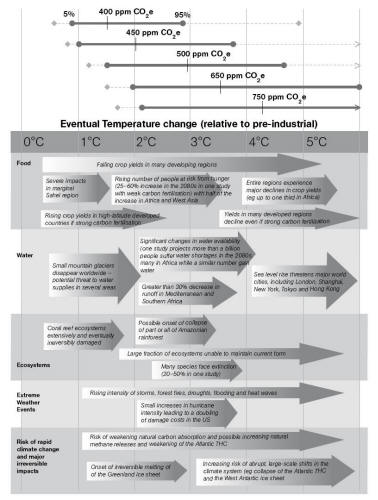

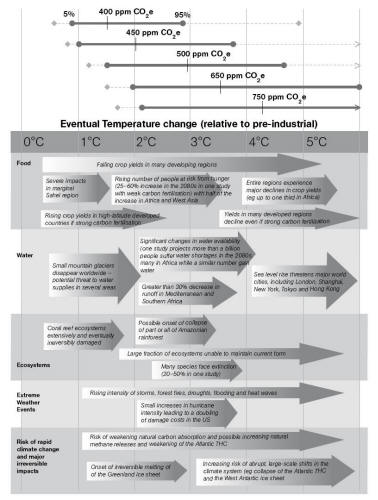

Figure 2, also known as the ‘burning embers

diagram’, is an illustration of the IPCC’s five reasons for concern.

It shows that the potentially most serious climate change impacts (arrow heads), expected to be experienced due to a range of equilibrium warming temperatures projected from stabilization levels between 400 ppm and 750 ppm of carbon dioxide equivalent (CO2e), typically occur after only a few degrees of warming.81

In April 2009, a team of researchers, many of whom were lead authors of the

most recent IPCC report, revised the burning embers diagram. While the

diagram was rejected from the IPCC’s Fourth Assessment Report because the

artwork was thought to be too unnerving, it was later published in

the peer-reviewed journal Proceedings of the National Academy of Sciences.

The updated diagram showed that an even smaller

increase in global average surface temperature could lead to significant

consequences for all five elements in the ‘reasons for concern’ framework.82

Figure 2: Burning embers diagram.83

The solid horizontal lines indicate the 5-95 per cent range based on climate sensitivity estimates from the IPCC 2001 and a study by one of the UK’s leading climate research units, the Hadley Centre.84 The vertical line indicates the mean of the 50th percentile point. The dashed lines show the 5-95 per cent range based on 11 recent studies.85 The bottom panel illustrates the range of impacts expected at different levels of warming.

|

Box 6: Sea-level rise

Rising sea levels will be one

of the most significant impacts of climate change over the next

century. This is because coastal zones are home to a significant

proportion of humanity. These regions often have average population

densities three times the global mean density.86

Tide gauge and satellite data show a global average sea-level rise

of 1.8mm per year between 1961 and 2003.87 In recent years, however,

this rate has increased to around 3.3 ± 0.4mm per year over the

period 1993 to 2006.88 This observation is 40 per cent above the IPCC projected best-estimate rise of less than 2mm per year.

The main contribution to rising sea levels has been thermal

expansion of the oceans, with a further contribution from melting

land-based ice (e.g. glaciers, and the Greenland and Antarctic ice

sheets).

Due to a number of uncertainties about the way that ice-sheets

behave, future sea level rise is difficult to predict

accurately. Nevertheless, melt-water from Antarctica, Greenland and

small ice caps could lead to a global sea level rise (the mean value

of local sea level taken across the ocean) of between 0.75 and 2 m by

the end of the century.89,90,91

However, recent research published by NASA’s James Hansen and a team

of researchers warned that destabilization of the Greenland ice

sheet is possible before global surface temperatures reach 2°C.92,93

This could lead to a sea-level rise of seven meters or more. While

this rise may occur over a number of centuries, a mechanism of

‘albedo-flip’ could result in a much more rapid sea-level rise.94

The albedo-flip is a key feedback mechanism on large ice sheets, and

occurs when snow and ice begin to melt. While snow cover has a high

albedo (i.e. reflects back to space most of the sunlight striking

it), melting ‘wet’ ice is darker and absorbs much more sunlight. A

proportion of the melt water burrows through the ice sheet and

lubricates its base, accelerating the release of icebergs to the

ocean.

Such an extreme rise in sea level would have catastrophic

implications for humanity. For example one study estimates that

currently roughly 410 million people (or about 8 per cent of global

population) live within five meters of present high tide.95

Allowing

for population growth, this figure could well double over the course

of the twenty-first century. Densely-populated Nile and Asian

‘mega-deltas’ may disappear in addition to large areas around the

southern North Sea. |

Aiming for 2°C

Historically, an increase in equilibrium temperature of Earth’s atmosphere

by 2°C has been considered a ‘safe’ level of warming.

James Hansen’s warning

that global temperatures should not be allowed to exceed 1.7°C, however,

strongly suggests that a warming of 2°C cannot be described as ‘safe’.

As

Professor Rahmstorf says:

‘If we look at all of the impacts, we’ll probably

decide that two degrees is a compromise number, but it’s probably the best

we can hope for’.

In 2007, NASA’s James Hansen argued that temperatures should not go

beyond 1.7°C (or 1°C above 2000 temperatures) if we are to

avoid practically irreversible ice sheet and species loss.96

For example,

collapse of the Greenland ice sheet is more than likely to be triggered by a

local warming of 2.7°C, which could correspond to a global mean temperature

increase of 2°C or less.97, 98 The disintegration of the Greenland ice sheet

could correspond to a sea-level rise by up to 7m in the next 1000 years, not

to mention the positive climate feedback effects due to changes in

land-surface reflective properties (see Box 6).

This would act to increase

the warming as darker surfaces absorb more heat. Coral reef, alpine and

Arctic ecosystems will also potentially face irreversible damage below a

global average surface temperature rise of 2°C.99

In terms of the social impacts of climate change, what is manageable for

some is actually catastrophic for others. For example, at the climate change

conference in Copenhagen in late 2009, the Alliance of Small Island States, a grouping of 43 of the smallest and most vulnerable countries, rejected

the 2°C target. They argued that 1.5°C is a better target, as many of their

islands will disappear with warming beyond this point.100

Climate policy, therefore, needs to redefine what is described as a ‘safe’

level of warming, rather than relying on an acceptable level of

warming decided by those who bear the least impact. Additionally, recent

research (see Box 10) shows that real temperature outcomes are

related less to concentrations of greenhouse gases than to a cumulative

carbon budget.101,102

In other words, not only is 2°C unsafe, it is

unhelpful when defining targets for climate policy.

But, given that a 2°C target is now firmly established within the policy

context, it is worth examining what it will mean should this temperature be

exceeded.

The inter-agency report Two degrees, one chance, published by Tearfund, Oxfam, Practical Action and Christian Aid, states:

Once temperature increase rises above 2°C up to 4 billion people could be

experiencing growing water shortages. Agriculture will cease to be viable in

parts of the world and millions will be at risk of hunger. The rise in

temperature could see 40-60 million more people exposed to malaria in

Africa. The threshold for the melting of the Greenland ice-sheet is likely to

have been passed and sea-level rise will accelerate.

Above 2°C lies the

greater danger of ‘tipping points’ for soil carbon release and the collapse

of the Amazon rainforest.103

Abrupt climate change: tipping points in the climate system

The Earth’s geological history is full of examples of abrupt climate change,

when the climate system has undergone upheaval, shifting from one relatively

stable state to another.

Transition to a new state is triggered when a

critical threshold is crossed. When this happens, the rate of change becomes

determined by the climate system itself, occurring at a faster rate than the

original forcing. For example, until 6000 years ago the Sahara Desert was

covered by vegetation and wetlands.

While the transition was driven by

subtle and smooth changes in incoming solar radiation, at a critical point

there was a regime shift in the rainfall patterns causing the landscape to

switch from lush vegetation to desert, at a rate far greater than the

original solar forcing.104

In 2008, Tim Lenton, Professor of Earth System Science, and a team of

researchers at the University of East Anglia concluded that because of

these critical thresholds in the climate system ‘society may have been

lulled into a false sense of security’ by the projections of apparently

‘smooth’ climate change.105

The research suggested that a variety of

tipping elements of the climate system, such as the melting of ice sheets or

permafrost, could reach their critical point (tipping point) within this

century under current emission trajectories. Tipping elements describe

subsystems of the Earth’s system that are at least sub-continental in scale

and can be switched - under certain circumstances - into a qualitatively

different state by small perturbations. The tipping point is the

corresponding critical point.

Tipping elements identified by the study include: collapse of the Greenland

ice sheet; drying of the Amazon rainforest; collapse of the West Antarctic

ice sheet; dieback of Boreal forests; greening of the Sahara/Sahel due to a

shift in the West African monsoon regime; collapse of the North Atlantic

ocean circulation; and changes to the El Niño-Southern Oscillation

amplitude.

Whether or not these highly unpredictable factors are made part of

decision-making is a political choice. But, given the existence of tipping

points in the climate system, it is hard to sustain the assumption that we

may be able to stabilize the climate or even CO2 concentrations once a

certain threshold level of temperature or concentration of CO2 is

reached.

But, the authors of the assessment identified a significant gap in

research into the potential of tipping elements in human socio-economic

systems, especially into whether and how a rapid societal transition towards

sustainability could be triggered.106

If the impacts of climate change are non-linear then our response, both in

mitigating and adapting to climate change, also has to be non-linear.

|

Box 7. Time is running out

107

In August 2008 nef calculated

that 100 months from 1 August 2008, atmospheric concentrations of

greenhouse gases will begin to exceed a point whereby it is no

longer likely we will be able to avert potentially irreversible

climate change. ‘Likely’ in this context refers to the definition of

risk used by the IPCC to mean that, at that particular level of

greenhouse gas concentration, there is only a 66-90 per cent chance

of global average surface temperatures stabilizing at 2°C above

pre-industrial levels.

In December 2007, the likely CO2e concentration was estimated to be

just under 377 ppm, based on a CO2 concentration of 383 ppm. This

seemingly counter-intuitive measure is explained by the proper

inclusion in the CO2e figure of all emissions affecting radiative

forcing - in other words, both those with cooling and warming

effects.

If stabilization occurs at 400 ppm, there is a 10-34 per cent chance

of overshooting a 2°C warming. Beyond this point, the probability of

stabilizing global surface temperatures at less than 2°C decreases.

It would seem that if policy-makers are at all serious about

avoiding dangerous climate change at a threshold of 2°C or less,

emissions need to be reduced significantly. |
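The arithmetic in Box 7 can be checked with a short sketch. It assumes only the figures quoted in the box (a start date of 1 August 2008, 100 months, and a 66-90 per cent chance of stabilizing at 2°C); the function names are hypothetical and are not part of nef's own calculation.

```python
from datetime import date


def add_months(start: date, months: int) -> date:
    """Return the date `months` calendar months after `start`.

    Assumes the day of the month also exists in the target month,
    which always holds for the first of the month used here.
    """
    index = start.month - 1 + months
    return date(start.year + index // 12, index % 12 + 1, start.day)


def overshoot_range(stay_low_pct: int, stay_high_pct: int) -> tuple:
    """Turn a 'chance of staying at or below the threshold' range (per cent)
    into the complementary 'chance of overshooting' range (per cent)."""
    return (100 - stay_high_pct, 100 - stay_low_pct)


# 100 months counted from 1 August 2008, as stated in Box 7.
print(add_months(date(2008, 8, 1), 100).isoformat())

# A 66-90 per cent chance of stabilizing at 2°C is the same statement
# as a 10-34 per cent chance of overshooting it.
print(overshoot_range(66, 90))
```

Read this way, the two probability ranges in the box are the same statement seen from opposite sides.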

What is the risk of overshooting 2°C under various

stabilization scenarios?

We wouldn’t fly in a plane that had more

than a 1 per cent chance of crashing. We should be at least as

careful with the planet. Current climate policies provide us with

far less than a 99 per cent chance of avoiding catastrophic climate

change.108

Paul Sutton, Carbon Equity

When the Kyoto Protocol was established in 1997,

the best scientific understanding implied that a 50 per cent reduction in

emissions below 1990 levels by 2050 would be sufficient to avoid dangerous

climate change.

Thirteen years on, the understanding of what constitutes

safe climate change has improved significantly. Now, there is a growing

consensus that at least an 80 per cent reduction in CO2 emissions below 1990

levels will be required by 2050 globally if we are to have a greater than 60

per cent chance of not exceeding 2°C.109

A recent analysis by the Tyndall

Centre for Climate Change Research demonstrated what this means for the UK.

Incorporating all sectors of the economy, the UK is required to reduce its

carbon dioxide emissions by some 70 per cent by 2030, and around 90 per cent

by 2050.110

As described earlier, the ‘safe’ level of temperature rise is itself

misleading. In addition, a number of assessments exploring the probability of exceeding

various temperature thresholds have been published. These studies

demonstrate that the stabilization of atmospheric concentrations of

greenhouse gases at anything above 400 ppm is too high to avoid a

temperature rise of 2°C.111,112

Research led by Malte Meinshausen, a climate modeler based at the Potsdam

Institute for Climate Impact Research in Germany, has shown that

stabilization of greenhouse gas concentrations (defined as CO2e) at 550 ppm

carries a 68-99 per cent risk of overshooting 2°C warming.113

According to the IPCC, this is defined as ‘likely’ to ‘very likely’.114

Meinshausen’s work also suggests that only by stabilizing concentrations at 400

ppm is it ‘likely’ that the climate will stabilize at 2°C.
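The mapping from a probability to the IPCC's calibrated wording can be illustrated with a minimal sketch. The thresholds below are a simplified subset of the likelihood scale used in the IPCC's Fourth Assessment Report (more than 66 per cent for 'likely', more than 90 per cent for 'very likely', and so on); the table and function names are hypothetical, and the lower half of the scale is deliberately left out.

```python
# Simplified, partial version of the IPCC AR4 likelihood scale.
# Each entry is (lower bound, term): a probability strictly above the
# bound earns the term. Ordered from the strictest bound downwards.
LIKELIHOOD_SCALE = [
    (0.99, "virtually certain"),
    (0.90, "very likely"),
    (0.66, "likely"),
    (0.50, "more likely than not"),
]


def likelihood_term(probability: float) -> str:
    """Return the first term whose lower bound the probability exceeds."""
    for lower_bound, term in LIKELIHOOD_SCALE:
        if probability > lower_bound:
            return term
    return "not classified in this sketch"


# The two ends of the 68-99 per cent overshoot risk quoted for 550 ppm CO2e.
for p in (0.68, 0.99):
    print(f"{p:.0%}: {likelihood_term(p)}")
```

Under these assumptions the two ends of the 68-99 per cent range fall into the 'likely' and 'very likely' bands respectively, which matches the wording quoted above.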

In early 2009, however, James Hansen and colleagues at Columbia University

contended that current atmospheric concentrations of CO2 need to be reduced

to 350 ppm.115 Hansen’s analysis for the first time used a climate

sensitivity parameter (temperature change due to an instant doubling of CO2)

that included slower surface albedo feedbacks.

Traditionally, the climate sensitivity parameter only includes

fast feedbacks (i.e. changes to water vapor, clouds and sea-ice) whilst

keeping slow-changing planetary surface conditions constant (i.e. forests

and ice sheets). In addition, long-lived non-CO2 forcings (other gases and

aerosols) are also kept constant over time. It is worth noting, to avoid any

confusion, that Hansen and his team were specifically referring to CO2 only

- not CO2e which also includes non-CO2 forcings.
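To make the role of the climate sensitivity parameter concrete, the sketch below uses the common logarithmic approximation in which each doubling of CO2 adds the same amount of equilibrium warming. Everything in it is an assumption for illustration: the 280 ppm pre-industrial baseline, the function name, and in particular the two sensitivity values (3°C and 6°C per doubling), which are placeholders for a 'fast feedbacks only' and a 'fast plus slow feedbacks' case and are not figures taken from this report. It is not Hansen's method, only a way to see why a larger sensitivity implies a lower concentration target.

```python
import math

PRE_INDUSTRIAL_PPM = 280.0  # assumed baseline CO2 concentration


def equilibrium_warming(co2_ppm: float,
                        sensitivity_per_doubling: float,
                        baseline_ppm: float = PRE_INDUSTRIAL_PPM) -> float:
    """Equilibrium warming (°C) under the logarithmic approximation:
    sensitivity multiplied by the number of CO2 doublings since baseline."""
    return sensitivity_per_doubling * math.log2(co2_ppm / baseline_ppm)


# Illustrative sensitivities only (°C per doubling of CO2).
scenarios = {"fast feedbacks only": 3.0, "fast + slow feedbacks": 6.0}

# 385 ppm and 350 ppm are the CO2 concentrations quoted in the text.
for label, sensitivity in scenarios.items():
    for ppm in (385.0, 350.0):
        warming = equilibrium_warming(ppm, sensitivity)
        print(f"{label:>22} | {ppm:.0f} ppm | {warming:.2f} °C")
```

Because the relationship is linear in the sensitivity, doubling that parameter doubles the equilibrium warming associated with any given CO2 concentration.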

The paper concluded with the harrowing warning:

‘If humanity wishes to

preserve a planet similar to that on which civilization developed and to

which life on Earth is adapted, paleoclimate evidence and ongoing climate

change suggest that CO2 will need to be reduced from its current 385 ppm to

at most 350 ppm, but likely less than that.’116

Questioning climate policy assumptions

Certain assumptions underlie scenarios for the future stabilization of

greenhouse gas emissions and of their accumulation in the atmosphere.

These

include that historical rates for both energy efficiency improvements and

declining energy intensity will continue and accelerate into the future. In

turn, it is assumed that these will result in an absolute decrease in energy

consumption.

Yet, these assumptions are hugely dependent on

three questions that are not so much unanswered, as barely even asked:

- Is the stabilization of greenhouse gases through long-term targets the

most effective response to climate change?

- What are the theoretical and practical limits to energy efficiency of the

economy?

- Do increases in energy efficiency actually result in decreases in the

demand for energy services?

Under this questioning, current climate change policies appear seriously

flawed, worsening the prognosis for future climate change and our ability to

deal with it.

For example, there are theoretical limits to efficiency governed by the laws

of thermodynamics. There are practical limits to efficiency, relating to

economic, social and political barriers, and the speed at which we can

replace current energy systems.

Observations in the real world suggest that increases in energy efficiency