|

by Joel Hruska

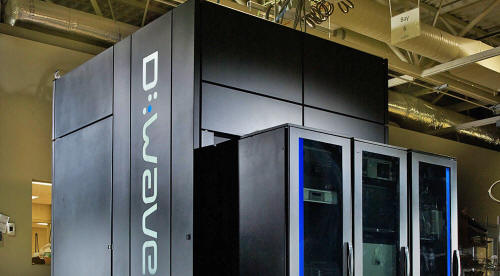

Ever since quantum computer manufacturer D-Wave Systems announced that it had created an actual system, there have been skeptics.

The primary concern was that D-Wave hadn't built a quantum computer as such, but had instead constructed a system that happened to simulate quantum annealing - the one specific type of quantum computing that the D-Wave performs - more effectively than any previous architecture.

Earlier reports suggested this was untrue, and Google has now put such fears to rest.

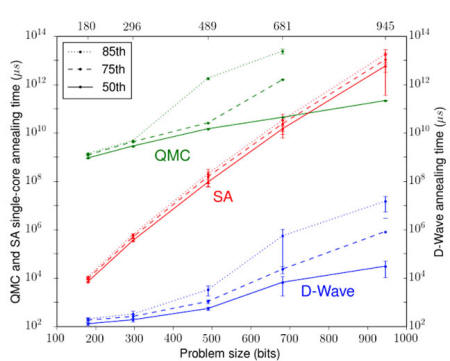

The company has presented findings conclusively demonstrating that the D-Wave does perform quantum annealing and is capable of solving certain types of problems up to 100 million times faster than conventional systems.

Over the past two years, D-Wave and Google have worked together to test the types of solutions that the quantum computer could create and measure its performance against traditional CPU and GPU compute clusters.

In a new blog post, Hartmut Neven, director of engineering at Google, discusses the proof-of-principle problems the company designed and executed to demonstrate,

He writes:

Neven goes on to write that while these results prove, unequivocally, that D-Wave is capable of performance that no modern system can match - optimizations are great and all, but a 100-million-fold speed-up is tough to beat in software - the practical impact of these optimizations is currently limited.

The problem with D-Wave's current generation of systems is that they are sparsely connected.

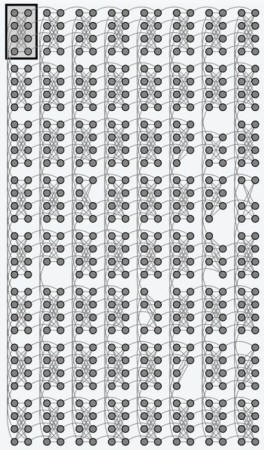

This is illustrated in the diagram below:

D-Wave 2's connectivity tree

Each dot in this diagram represents a qubit; the number of qubits in a system controls the size and complexity of the problems it can solve.

While each group of qubits is cross-connected, there are relatively few connections between the groups of qubits. This limits the kinds of computation that the D-Wave 2 can perform, and simply scaling out to more sparsely-connected qubit clusters isn't an efficient way to solve problems (and can't work in all cases).
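To get a feel for how sparse that wiring is, here is a rough Python sketch that counts couplers in a grid of small qubit groups. The layout is an assumption for illustration, not something stated above: an 8x8 grid of 8-qubit cells, where four "left" qubits in a cell each couple to the four "right" qubits, left qubits link to the cell below, and right qubits link to the cell beside them. The function name `chimera_edges` and its parameters are hypothetical.

```python
from itertools import product

def chimera_edges(rows, cols, shore=4):
    """Return (intra, inter) coupler sets for an assumed grid of qubit cells."""
    intra, inter = set(), set()
    for r, c in product(range(rows), range(cols)):
        # Inside a cell: every "left" qubit (side 0) couples to every "right" qubit (side 1).
        for i, j in product(range(shore), range(shore)):
            intra.add(((r, c, 0, i), (r, c, 1, j)))
        # Between cells: one link per qubit to the matching qubit in a neighbouring cell.
        for k in range(shore):
            if r + 1 < rows:
                inter.add(((r, c, 0, k), (r + 1, c, 0, k)))
            if c + 1 < cols:
                inter.add(((r, c, 1, k), (r, c + 1, 1, k)))
    return intra, inter

intra, inter = chimera_edges(8, 8)            # assumed 8x8 grid of cells
n_qubits = 8 * 8 * 2 * 4                      # 512 qubits in this layout
fully_connected = n_qubits * (n_qubits - 1) // 2

print(len(intra), "couplers inside cells")    # 1024
print(len(inter), "couplers between cells")   # 448
print(fully_connected, "couplers if every qubit touched every other")  # 130816
```

Under these assumptions the chip has 1,472 couplers where a fully connected design would need 130,816, or a little over 1 percent. A problem whose variables all interact with each other has to be squeezed onto that wiring, which is why adding more sparsely connected cells doesn't help as much as the raw qubit count suggests.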

Because of this, simulated annealing - the version performed by traditionally designed CPUs and GPUs - is still regarded as the gold standard that quantum annealing needs to beat.
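For readers who haven't met it, simulated annealing on an ordinary CPU can be sketched in a few lines of Python. This is a minimal illustration, not the benchmark code used in the tests described above: the energy function, the cooling schedule, the parameter values, and the tiny six-spin ring problem are all assumptions chosen to keep the example short.

```python
import math
import random

def energy(spins, couplings):
    """Ising-style energy: sum of J * s_a * s_b over every coupled pair."""
    return sum(j * spins[a] * spins[b] for (a, b), j in couplings.items())

def simulated_annealing(n, couplings, steps=20000, t_start=5.0, t_end=0.05, seed=0):
    rng = random.Random(seed)
    spins = [rng.choice((-1, 1)) for _ in range(n)]
    current = energy(spins, couplings)
    best, best_spins = current, spins[:]
    for step in range(steps):
        # Geometric cooling: the temperature falls smoothly from t_start to t_end.
        t = t_start * (t_end / t_start) ** (step / (steps - 1))
        i = rng.randrange(n)
        spins[i] = -spins[i]                      # propose flipping one spin
        candidate = energy(spins, couplings)      # recomputed in full for clarity, not speed
        delta = candidate - current
        # Metropolis rule: always accept improvements, sometimes accept worse moves.
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            current = candidate
            if current < best:
                best, best_spins = current, spins[:]
        else:
            spins[i] = -spins[i]                  # reject: undo the flip
    return best, best_spins

# Toy problem: six spins in a ring, each pair of neighbours preferring to disagree.
ring = {(i, (i + 1) % 6): 1 for i in range(6)}
best, best_spins = simulated_annealing(6, ring)
print(best, best_spins)
```

The occasional acceptance of a worse move is what lets the search climb out of a local minimum by going over the hill thermally. Quantum annealing aims to do the same job by tunnelling through the hill instead, which is where any speed advantage would have to come from.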

How much of an impact quantum computing will have on traditional markets is still unknown. Current systems rely on cryogenic cooling to temperatures near absolute zero and on incredibly expensive designs.

Costs may drop as new techniques for building more efficient quantum annealers are discovered, but so long as these systems require that kind of cooling to function, they're not going to be widely used. ...and other supercomputer clusters may have specialized needs that are best addressed by quantum computing in the long term, but silicon and its eventual successors are going to be the mainstay of the general-purpose computing market for decades to come.

At least for now, quantum computers will tackle the problems our current computers literally can't solve - or can't solve before the heat death of the universe, which is more or less the same thing...

|