
by Makia Freeman, December 8, 2017, from Freedom-Articles Website

We have reached the stage of AI building AI. Our AI robots/machines are creating child AI robots/machines.

Have we already lost control?

AI Building AI

We are at the point where corporations are designing Artificial Intelligence (AI) machines, robots and programs to make child AI machines, robots and programs - in other words, we have AI building AI.

While some praise this development and point out the benefits (the fact that AI is now smarter than humanity in some areas, and thus can supposedly better design AI than humans), there is a serious consequence to all this: humanity is becoming further removed from the design process - and therefore has less control.

We have now reached a watershed moment with AI building AI better than humans can.

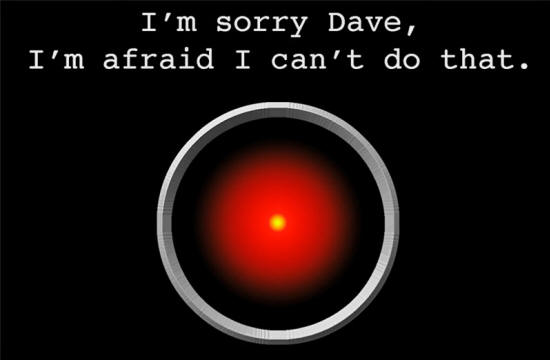

If AI builds a child AI which outperforms, outsmarts and overpowers humanity, what happens if we want to modify it or shut it down - but can't?

After all, we didn't design it, so how can we be 100% sure there won't be unintended consequences? How can we be sure we can 100% directly control it?

AI building AI: AutoML built NASNet. Image credit: Google Research

AI Building AI - Child AI Outperforms All Other Computer Systems in Task

Google Brain researchers announced in May 2017 that they had created AutoML, an AI that can build child AIs.

The "ML" in AutoML stands for Machine Learning. As the article "Google's AI Built Its Own AI that Outperforms Any Made by Humans" reveals, AutoML created a child AI called NASNet which outperformed all other computer systems in its task of object recognition:

With AutoML, Google is building algorithms that analyze the development of other algorithms, to learn which methods are successful and which are not.

This Machine Learning, a significant trend in AI research, is like "learning to learn" or "meta-learning."

We are entering a future where computers will invent algorithms to solve problems faster than we can, and humanity will be further and further removed from the whole process.
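
As a rough illustration of the "learning to learn" idea described above, here is a minimal Python sketch of a parent procedure that proposes child designs, scores them, and shifts its preferences toward whatever worked. This is not Google's AutoML: it swaps in a simple weighted random search, and the search space, the made-up scoring function and every name in it (`SEARCH_SPACE`, `evaluate_child`, `propose_child`, `search`) are assumptions invented for illustration only.

```python
import random

# Hypothetical search space: each "child" is just a (layers, width) pair.
SEARCH_SPACE = {
    "layers": [1, 2, 3, 4],
    "width": [8, 16, 32, 64],
}


def evaluate_child(child):
    """Stand-in for training a child model and measuring its accuracy.

    Assumption: a made-up score that peaks at 3 layers and width 32.
    A real system would train the child on data at this step.
    """
    return 1.0 - 0.1 * abs(child["layers"] - 3) - 0.005 * abs(child["width"] - 32)


def propose_child(weights, rng):
    """The parent samples a child design, favoring options that scored well."""
    return {
        name: rng.choices(options, weights=weights[name])[0]
        for name, options in SEARCH_SPACE.items()
    }


def search(rounds=200, seed=0):
    """Run the propose / score / update loop and return the best child seen."""
    rng = random.Random(seed)
    # Start with no preference among the options.
    weights = {name: [1.0] * len(options) for name, options in SEARCH_SPACE.items()}
    best_child, best_score = None, float("-inf")
    for _ in range(rounds):
        child = propose_child(weights, rng)
        score = evaluate_child(child)
        # Feedback step: boost the options this child used, in proportion to its score.
        for name, options in SEARCH_SPACE.items():
            weights[name][options.index(child[name])] += max(score, 0.0)
        if score > best_score:
            best_child, best_score = child, score
    return best_child, best_score


if __name__ == "__main__":
    child, score = search()
    print(child, round(score, 3))
```

Note that no human picks the winning design directly here. People choose only the search space and the scoring rule, which is the sense in which humans sit one step back from the result.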

AI building AI: how will humanity control child AIs when humans didn't create them?

AI Building AI - Programmed Parameters vs. Autonomous and Adaptable Systems

The issue at stake is how much "freedom" we give AI.

By that I mean this: those pushing the technological agenda boast that AI is qualitatively different to any machines of the past, because AI is autonomous and adaptable, meaning it can "think" for itself, learn from its mistakes and alter its behavior accordingly.

This makes AI more formidable and at the same time far more dangerous, because then we lose the ability to predict how it will act. It begins to write its own algorithms in ways we don't comprehend based on its supposed "self-corrective" ability, and pretty soon we have no way to know what it will do.

Now, what if such an autonomous and adaptable AI is given the leeway to create a child AI which has the same parameters? Humanity is then one step further removed from the creation.

Yes, we can program the first AI to design child AIs only within certain parameters, but can we ultimately control that process and ensure the biases are not handed down, given that we are programming AI in the first place to be more human-like and learn from its mistakes?

In his article "The US and the Global Artificial Intelligence Arms Race," Ulson Gunnar writes:

AI Building AI: Can We Ever Be 100% Sure We Are Protected Against AI?

Power and strength without wisdom and kindness is a dangerous thing, and that's exactly what we are creating with AI.

We can't ever teach it to be wise or kind, since those qualities spring from having consciousness, emotion and empathy. The best we can do is set very tight ethical parameters; however, there are no guarantees here.

The average person has no way of knowing what code was created to limit AI's behavior. Even if all the AI programmers in the world wanted to ensure adequate ethical limitations, what if someone, somewhere, makes a mistake?

What if AutoML creates systems so quickly that society can't keep up in terms of understanding and regulating them?

NASNet could easily be employed in automated surveillance systems due to its excellent object recognition. Do you think the NWO controllers would hesitate even for a moment to deploy AI against the public in order to protect their power and destroy their opposition?

The article "Google's AI Built Its Own AI that Outperforms Any Made by Humans" tries to reassure us with its conclusion:

However, I am anything but reassured. We can set up all the ethics committees we want. The fact remains that it is theoretically impossible to ever protect ourselves 100% from AI.

The article "Containing a Superintelligent AI is Theoretically Impossible" explains:

Meanwhile, it appears there are too many lures and promises of profit, convenience and control for humanity to slow down.

AI is starting to take everything over. Facebook just deployed a new AI which scans users' posts for "troubling" or "suicidal" comments and then reports them to the police!

This article states:

Final Thoughts

With AI building AI, we are taking another key step forward into a future where we are allowing power to flow out of our hands. This is another watershed moment in the evolution of AI.

What is going to happen?
