
from

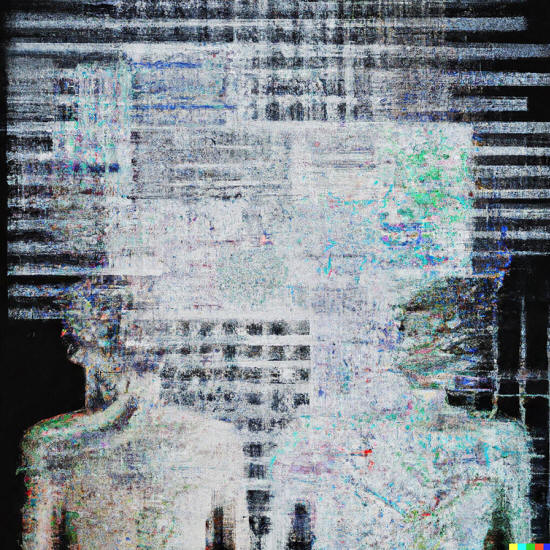

NYTimes Website when given the prompt "A distributed linguistic superbrain that takes the form of an A.I. chatbot." Credit...Kevin Roose, via DALL-E

Social media's newest star is a robot: ChatGPT, an A.I. chatbot.

Since its debut last week, many people have shared what the bot can do.

New York magazine journalists told it to write what turned out to be a "pretty decent" story. Other users got it to write a solid academic essay on theories of nationalism, a history of the tragic but fictitious Ohio-Indiana War and some jokes.

It told me a story about an artificial intelligence program called Assistant that was originally set up to answer questions but soon led a New World Order that guided humanity to …

What is remarkable about these examples is their quality.

OpenAI, the company behind ChatGPT, is reportedly working on a better model that could be released next year.

In today's newsletter, I'll explain the potential benefits of artificial intelligence but also why some experts worry it could be dangerous.

Advanced efficiency

The upside of artificial intelligence is that it might be able to accomplish tasks faster and more efficiently than any person can.

The possibilities are limited only by the imagination.

The technology is not there yet.

But it has advanced in recent years through what is called machine learning, in which bots comb through data to learn how to perform tasks.

In ChatGPT's case, it read a lot. And, with some guidance from its creators, it learned how to write coherently, or at least how to statistically predict what good writing should look like.
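To make "statistically predict" a little more concrete, here is a toy sketch in Python. It is a drastic simplification and purely an illustration, not how ChatGPT actually works: ChatGPT relies on a large neural network trained on vast amounts of text, while this sketch (a so-called bigram model) only counts which word most often follows another in a tiny made-up sample. The function names and the sample text are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it and how often."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the word most often seen after `word`, or None if `word` was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

def generate(follows, start, length):
    """Greedily chain up to `length` predicted words after `start`."""
    words = [start]
    for _ in range(length):
        nxt = predict_next(follows, words[-1])
        if nxt is None:
            break  # no statistics for this word, so stop
        words.append(nxt)
    return " ".join(words)

# A hypothetical, tiny "reading list" for the model.
corpus = "the bot writes text . the bot reads text . the bot writes text . the bot writes jokes ."
model = train_bigrams(corpus)

print(predict_next(model, "bot"))  # writes
print(generate(model, "the", 3))   # the bot writes text
```

The model never "understands" anything; it simply picks the continuation it has seen most often. Real chatbots predict from far richer context than a single previous word, which is part of why their output can be so fluent.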

There are already clear benefits to this nascent technology.

One example comes from a different program, Consensus.

This bot combs through up to millions of scientific papers to find the most relevant ones for a given search and to share their major findings. It does in a couple of minutes what would take a journalist like me days or weeks.

These are early days.

But the technology is evolving very quickly.

Even some skeptics believe that general-use A.I. could reach human levels of intelligence within decades.

Unknown risks

Despite the potential benefits, experts are worried about what could go wrong with A.I.

If A.I. reaches the heights that some researchers hope for, it will be able to do almost anything people can, but better.

Some experts point to existential risks.

These are people saying that their life's work could destroy humanity.

That might sound like science fiction.

But the risk is real, experts caution.

Take one hypothetical example, from Kelsey Piper at Vox:

If that sounds implausible, consider that the current bots already behave in ways that their creators don't intend.

ChatGPT users have come up with workarounds to make it say racist and sexist things, despite OpenAI's efforts to prevent such responses.

The problem, as A.I. researchers acknowledge, is that no one fully understands how this technology works, making it difficult to control for all possible behaviors and risks.

Yet it is already available for public use.
