
on display at NeoSensory.

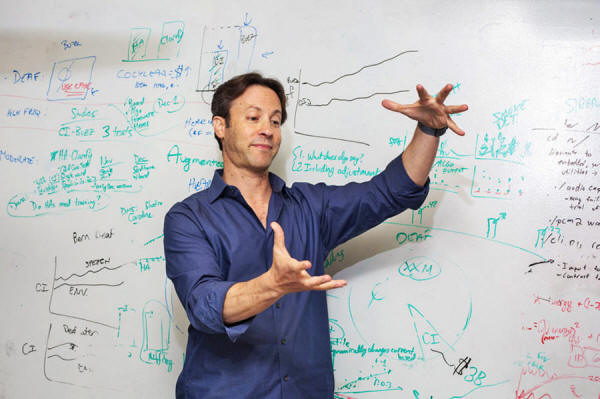

NeoSensory's technology aims to give deaf people a new way to hear, and to upgrade everyone else's senses too.

The Buzz is a wristband slightly bigger than a Fitbit. Inside it are a microphone that picks up sound and a computer chip that breaks that sound into eight frequency ranges.

Each frequency range links to a built-in micromotor. When sound from a specific range activates the corresponding motor, it buzzes slightly. It's more than a tingle but less than a bee sting.

For example, when Eagleman says the word "touch," his baritone drone of the "uh" sound in the middle of the word buzzes the left side of my wrist, and then the higher-pitched "ch" that ends the word buzzes the right.
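The band-splitting described above can be sketched in a few lines. This is a minimal illustration, not NeoSensory's implementation: the 16 kHz sample rate, the log-spaced band edges running from 100 Hz up to half the sample rate, and the helper names `band_edges` and `frame_to_motors` are all assumptions. The sketch takes one short frame of audio, measures how much energy falls into each of eight frequency ranges, and scales the result so the loudest range drives its motor at full strength.

```python
import numpy as np

# Assumed values for illustration; these are not published Buzz specifications.
SAMPLE_RATE = 16_000   # microphone sample rate in Hz
N_MOTORS = 8           # one motor per frequency range, as on the Buzz


def band_edges(n_bands: int, f_lo: float = 100.0, f_hi: float = 8_000.0) -> np.ndarray:
    """Hypothetical log-spaced band edges: n_bands + 1 values from f_lo to f_hi."""
    return np.geomspace(f_lo, f_hi, n_bands + 1)


def frame_to_motors(frame: np.ndarray, sample_rate: int = SAMPLE_RATE,
                    n_bands: int = N_MOTORS) -> np.ndarray:
    """Map one frame of audio to per-motor intensities between 0 and 1."""
    windowed = frame * np.hanning(len(frame))            # taper edges to limit spectral leakage
    power = np.abs(np.fft.rfft(windowed)) ** 2           # power at each frequency bin
    freqs = np.fft.rfftfreq(len(frame), d=1 / sample_rate)
    edges = band_edges(n_bands, f_hi=sample_rate / 2)    # top edge at the Nyquist frequency
    energy = np.array([
        power[(freqs >= lo) & (freqs < hi)].sum()        # total energy in this band
        for lo, hi in zip(edges[:-1], edges[1:])
    ])
    peak = energy.max()
    return energy / peak if peak > 0 else energy         # loudest band -> 1.0; silence -> all zeros


if __name__ == "__main__":
    t = np.arange(1_000) / SAMPLE_RATE                   # 1,000 samples = 1/16 s at 16 kHz
    low = np.sin(2 * np.pi * 224 * t)                    # low tone standing in for "uh"
    high = np.sin(2 * np.pi * 3_008 * t)                 # high tone standing in for "ch"
    print(frame_to_motors(low).argmax())                 # 1 (a low-index motor)
    print(frame_to_motors(high).argmax())                # 6 (a high-index motor)
```

If motor 0 is taken as the left end of the wristband (another assumption), the low tone drives a motor near the left and the high tone one near the right, loosely mirroring the "touch" example. Passing `n_bands=32` would yield one intensity per motor for a 32-motor device, though how frequencies would actually be laid out across a torso is not something this sketch settles.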

Each VEST - short for Versatile Extra-Sensory Transducer - looks like a thin wetsuit vest with shiny black circles embedded in the fabric at regular intervals.

The VESTs work like the Buzz wristband, breaking sound into frequencies that are replayed on the skin. But instead of eight motors, each VEST has 32, evenly spaced across the chest and back.

Eagleman has versions that work with images, the major difference being that the microphone capturing sound is replaced by a camera capturing video.

He's also built versions that can detect information that typically eludes human senses.

There are varieties that can see in infrared and ultraviolet, two parts of the spectrum that are invisible to the human eye. Others can take live Twitter feeds or real-time stock market data and translate them into haptic sensations.

Researching synesthesia convinced Eagleman that anyone can develop new ways of perceiving the world.

Photo by Eric Ruby

For many years, he was a professor at Baylor College of Medicine, where he ran the only time-perception laboratory in the world. These days, he's an adjunct professor at Stanford and an entrepreneur, with his new startup, NeoSensory, located just a few blocks from campus.

Some people with synesthesia, a condition in which stimulating one sense also triggers experiences in another, smell colors in addition to seeing them, for example.

Scientists use the German word umwelt - pronounced "oom-velt" - to describe the world as perceived by the senses.

And not every animal's umwelt is the same.

An early prototype for the VEST, sewn at NeoSensory. Photo by Eric Ruby

But Eagleman wasn't so sure.

Synesthesia made him wonder whether our senses were more pliable than scientists had assumed. Plus, there had been 50 years of research showing the brain is capable of sensory substitution - taking in information via one sense but experiencing it with another.

"If you feed the brain a pattern, eventually it'll figure out how to decode the information."

Although the interface is different, this is roughly similar to what cochlear implants do. They take an auditory signal, digitize it, and feed it into the brain.

When cochlear implants were invented, not everyone was certain they would work.

We now know that cochlear implants work just fine.

So do retinal implants, even though they don't give signals to the brain in the same way as biological retinas do.

Each of these Buzz wristbands picks up different kinds of signals. Photo by Eric Ruby

It took eons to shape the bat's echolocation system or the octopus's ability to taste through its tentacles.

But Eagleman suspected all that time wasn't required.

As Eagleman explains all of this, Buzz is turning his words into touch and feeding that pattern through my skin and into my brain.

It's also gathering noise from the room. When one of NeoSensory's engineers slams a door, I can feel that on my wrist. Not that I'm consciously able to tell these signals apart.

The device's motors update every 1/16 of a second, which is faster than I can consciously process. Instead, Eagleman says, most of the learning, meaning my brain's ability to decode these signals, takes place at a subconscious level.
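The timing comes down to simple arithmetic: a motor update every 1/16 of a second means 16 updates per second. At an assumed microphone sample rate of 16 kHz (not a published Buzz specification), each update covers 16,000 / 16 = 1,000 audio samples. A minimal sketch of chopping an audio stream into update-sized frames, using a hypothetical helper called `frames`, might look like this:

```python
from typing import Iterator, Sequence

UPDATES_PER_SECOND = 16   # one motor update every 1/16 of a second
SAMPLE_RATE = 16_000      # assumed microphone sample rate in Hz (not a published spec)


def frames(samples: Sequence[float], sample_rate: int = SAMPLE_RATE,
           updates_per_second: int = UPDATES_PER_SECOND) -> Iterator[Sequence[float]]:
    """Yield consecutive, non-overlapping frames, one per motor update.

    A trailing partial frame is dropped rather than padded.
    """
    frame_len = sample_rate // updates_per_second    # 16_000 // 16 = 1_000 samples
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        yield samples[start:start + frame_len]


if __name__ == "__main__":
    one_second = [0.0] * SAMPLE_RATE
    print(sum(1 for _ in frames(one_second)))        # 16 updates per second
```

Each frame would then be reduced to one intensity per motor. The sketch uses non-overlapping frames for clarity; a real device might overlap its analysis windows to smooth transitions between updates.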

Some researchers claim that the tactile sensitivity of the skin isn't fine enough to tell signals apart. Others argue that this kind of subconscious learning isn't even possible.

But Eagleman says that in a not-yet-published experiment, deaf people learned how to "hear" with a Buzz.

On average, Eagleman explains, it took four training sessions, each roughly two hours long.

Eagleman says 80 percent of them could do this almost right away. The team also showed deaf people a video of someone talking and played one of two different soundtracks. One matched the video and one didn't.

Eagleman says 95 percent of the subjects could quickly identify the correct soundtrack using only the signals from the Buzz.


Even if the full ability to decode speech is not possible - Eagleman doesn't know, as he has yet to run long-term tests on his devices - Buzz's ability to provide "low-resolution hearing" will be extremely valuable, he says.

Compare that to another technological option for people with severe hearing loss: a cochlear implant. It requires invasive surgery and six weeks of recovery time, and it costs tens of thousands of dollars.

It also can take about a year for people to actually learn to hear properly with cochlear implants.

The VEST can light up as well as vibrate in response to auditory stimuli. Photo by Eric Ruby

The wristband might cost around $600 and the VEST around $1,000, but Eagleman says the prices aren't certain yet.

Can we use technology to expand our umwelts?

They were both wearing a version of the wristband that detects parts of the visual spectrum that humans can't see, including the infrared and ultraviolet frequencies.

As they strolled, neither seeing anything unusual, they both started getting really strong signals on their wrists.

They were being watched - but by what? Their wristbands allowed them to answer that question. They could track the signals back to their point of origin - an infrared camera attached to someone's home.

This may have been one of the very first times a human accidentally detected an infrared signal without the help of night-vision goggles. In fact, it may have been one of the first times humans accidentally expanded their umwelts.

As far as Eagleman can tell, with the right kind of data compression in place, there are no real limits to what the device can detect.


To explore this potential further, Eagleman has also teamed up with Philip Rosedale, the creator of the virtual world 'Second Life'.

Rosedale's next project, 'High Fidelity', is a virtual world designed for virtual reality.

Eagleman has a long-sleeve version of the VEST - known as the "exo-skin" - that's designed to work with it.

These devices are being released with an open API - meaning anyone can run their own experiments.

For example, my wife and I run a hospice care dog sanctuary and share our house with around 25 animals at a time. While the house is much quieter than many would suspect, there are definitely times when all our dogs start barking at once.

What the hell are they talking about?

Eagleman suspects that if I wore one of his devices around my pack of dogs for a while, sooner or later I might be able to detect what sets them off.

Will we ever know what it's like to be a bat? Maybe, maybe not.

But never before has the nature of reality been so pliable and our ability to experiment with alternate umwelts been so powerful.
