
by Brandon Turbeville

February 9, 2012

from ActivistPost Website


Brandon Turbeville is an author out of Mullins, South Carolina. He has a bachelor's degree from Francis Marion University and is the author of three books: Codex Alimentarius - The End of Health Freedom, 7 Real Conspiracies, and Five Sense Solutions. Turbeville has published over one hundred articles dealing with a wide variety of subjects, including health, economics, government corruption, and civil liberties.

Brandon Turbeville is available for podcast, radio, and TV interviews.


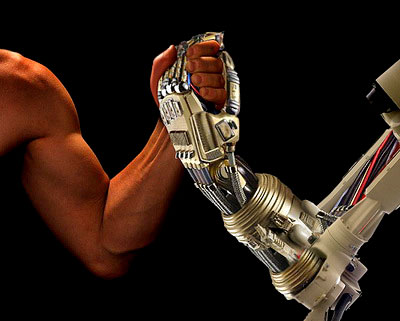

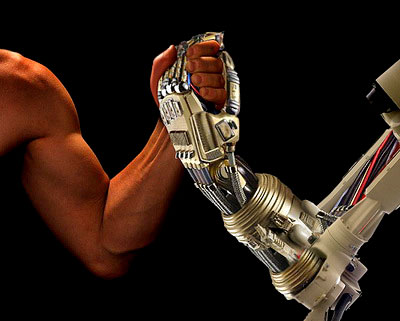

In a testament to just how fast the coming cyberization of mankind has progressed, a new report published by the Daily Mail entitled “Hitler would have loved The Singularity - Mind-blowing benefits of merging human brains and computers” reaffirms most of what I have been writing about for the better part of a year: namely, that the merging of man and machine is much closer than the average person is willing to believe.

In the report, Ian Morris, Professor of Classics and History at Stanford University and author of Why The West Rules - For Now, briefly surveys years of mainstream history involving the development and implementation of Singularity-related technologies.

Before going much further, however, it is important for the reader to understand just what is meant when the term “Singularity” is used.

According to Lev Grossman, writing in Time magazine (21 Feb 2011), the “Singularity” is “the moment when technological change becomes so rapid and profound, it represents a rupture in the fabric of human history.”

Grossman also provides a brief history of the word’s usage in futurism:

The singularity isn’t a wholly new idea, just newish. In 1965 the British mathematician I.J. Good described something he called an 'intelligence explosion':

“Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an 'intelligence explosion,' and the intelligence of man would be left far behind. Thus the first ultraintelligent machine is the last invention that man need ever make.”

The word 'singularity' is borrowed from astrophysics: it refers to a point in space-time - for example, inside a black hole - at which the rules of ordinary physics do not apply.

In the 1980s the science-fiction novelist Vernor Vinge attached it to Good’s intelligence-explosion scenario. At a NASA symposium in 1993, Vinge announced that "within 30 years, we will have the technological means to create superhuman intelligence. Shortly after, the human era will be ended."

Simply put, Singularity is the moment when man and machine merge to create a new type of human - a singular entity that contains properties of both machines and humans.

If the concept of Singularity is new to you, I suggest reading my article “The Singularity Movement, Immortality, and Removing the Ghost in the Machine.” In this article, I discuss the premise behind the movement, and some of the implications it holds for basic human freedom, dignity, and even our own existence.

Unfortunately, Singularity is not the fringe movement some might at first believe it to be; it has a great number of followers, many of whom are in powerful positions. For instance, the Singularity University is a three-year-old institution that offers interdisciplinary courses for both executives and graduate students.

It is hosted by NASA, a notorious front for secretive projects conducted by the government and the military-industrial complex. Not only that, but Google, which is yet another corporate front for intelligence agencies, was a founding sponsor of the University as well.

It is in this context that Ian Morris writes his own article about the coming merger of human brains and computers.

Morris prefaces his commentary on Singularity by pointing out some mainstream (even if not well-known) facts regarding the development of technology that he, and many others informed on the subject, believe will make it possible to send human thoughts over the Internet. All of this, of course, will take place after human brains are chipped, or otherwise linked to computers.

Morris writes:

Ten years ago, the US National Science Foundation predicted ‘network-enhanced telepathy’ - sending thoughts over the internet - would be practical by the 2020s.

And thanks to neuroscientists at the University of California, we seem to be on schedule.

Last September, they asked volunteers to watch Hollywood film trailers and then reconstructed the clips by scanning their subjects’ brain activity.

He continues by saying:

Last week, the scientists boldly went further still. They charted the electrical activity in the brains of volunteers who were listening to human speech and then they fed the results into computers which translated the signals back into language.

The technique remains crude, and has so far made out only five distinct words, but humanity has crossed a threshold.

The threshold that Morris refers to is the moment when the merging of man and machine is announced to the general public, not necessarily the moment when it becomes possible.

Indeed, we know that any research or development announced to the general public lags far behind the true capabilities of the technology. For instance, the ability to control brain function via computers, or for brains to control computers by thought, has been available for many years. Only crude forms of this technology have been introduced for mass consumption.

Even so, the introduction came a great many years after the actual development.

Yet, after pointing out some of the positive aspects that this technology might present to humanity, such as providing speech to those impaired by neurodegenerative diseases, or movement to those suffering from paralysis, Morris turns to some other, rather disturbing directions this rapidly developing technology might take.

Disturbing, that is, if one is not part of the Singularity cult.

Nevertheless, Morris moves through some innocuous and unquestionably beneficial developments, such as eyeglasses and ear trumpets, which show how far technology has progressed and the relatively short time it has taken to do so.

These devices have either become a normal part of life or given way to more advanced technologies. These more advanced devices, such as hearing aids, dialysis machines, and pacemakers, have all become normal and accepted machine additions as well.

However, as Morris writes:

By the second decade of the 21st Century, we have become used to organs grown in laboratories, genetic surgery and designer babies.

In 2002, medical researchers used enzymes and DNA to build the first molecular computers, and in 2004 improved versions were being injected into people’s veins to fight cancer.

By 2020 we may be able to put even cleverer nano-computers into our brains to speed up synaptic links, give ourselves perfect memory and perhaps cure dementia.

If nano-computers implanted in our brains would indeed enhance these functions of the human brain, thereby making possible further technological and biotechnological advances, then it is realistic to believe (as many in the Singularity movement do) that the human being as we know it will cease to exist.

The old man will be replaced by the new.

That which was made imperfect will be made perfect. This is exactly the future which Singularity promoters like Juan Enriquez have been foreseeing.

Enriquez’s long resume affirms the fact that those in prominent positions hold fast to what is essentially a modern version of eugenics based on more than mere ethnicity. Enriquez himself states that humanity, by virtue of Singularity, will develop into an entirely different species.

He writes:

The new human species is one that begins to engineer the evolution of viruses, plants, animals, and itself.

As we do that, Darwin’s rules get bent, and sometimes even broken. By taking direct and deliberate control over our evolution, we are living in a world where we are modifying stuff according to our desires...

Eventually, we get to the point where evolution is guided by what we’re engineering. That’s a big deal. Today’s plastic surgery is going to seem tame compared to what’s coming.

Enriquez also admits that, as a result of this emerging technology, a “new ethics” must be developed to go along with the opportunities for eugenics that now present themselves.

He says:

The issue of [genetic variation] is a really uncomfortable question, one that for good reason, we have been avoiding since the 1930s and '40s. A lot of the research behind the eugenics movement came out of elite universities in the U.S. It was disastrously misapplied.

But you do have to ask, if there are fundamental differences in species like dogs and horses and birds, is it true that there are no significant differences in humans? We are going to have an answer to that question very quickly.

If we do, we need to think through an ethical, moral framework to think about questions that go way beyond science.

Of course, the open promoters of Singularity such as Juan Enriquez and Ray Kurzweil are not the root of the movement.

As Morris points out, projects related to merging the human brain with the computer have been funded mostly by DARPA (Defense Advanced Research Projects Agency).

After all, as Morris notes, it was DARPA that produced the Internet (called ARPANET in the 1970s) and it was DARPA’s Brain Interface Project that was the first venture into molecular computing.

As I mentioned earlier, however, one should be aware that even the projects that have been announced and revealed to the general public actually lag far behind the true timeline of development. DARPA’s research and discoveries are years or decades ahead of anything it introduces, even retroactively, to the scientific community at large, much less the general public.

This is why programs such as Silent Talk are exploring mind-reading technology that reads the electrical signals inside the brains of soldiers and then broadcasts them for two-way communication with soldiers over the Internet.

As Morris writes:

With these implants, entire armies will be able to talk without radios. Orders will leap instantly into soldiers’ heads and commanders’ wishes will become the wishes of their men.

Add this to the fact that “mind reading” technology is already being rolled out in Western airports, and one can easily see an agenda at work.

A very crude version of the neuron-scanning technology discussed by Morris, these “Emotion Detectors” use video cameras and facial cues, as well as thermal imaging technology, to detect emotions that are unacceptable to “authorities.”

However, the technology Morris writes about is much more advanced than emotion scanners. Even the definition of “mind reading” in terms of the new interface programs tends to be more dynamic.

Consider how Morris describes Ray Kurzweil’s prediction of where mind-reading programs will go in the future.

He writes:

Since the Sixties, computer chips have been doubling their speed and halving their cost every 18 months or so.

If the trend continues, the inventor and predictor Ray Kurzweil has pointed out that by 2029 we will have computers powerful enough to run programs reproducing the 10,000 trillion electrical signals that flash around your skull every second.

They will also have enough memory to store the ten trillion recollections that make you who you are.

And they will also be powerful enough to scan, neuron by neuron, every contour and wrinkle of your brain.

What this means is that if the trends of the past 50 years continue, in 17 years’ time we will be able to upload an electronic replica of your mind on to a machine.

There will be two of you - one a flesh-and-blood animal, the other inside a computer’s circuits.

And if the trends hold fast beyond that, Kurzweil adds, by 2045 we will have a computer that is powerful enough to host every one of the eight billion minds on earth.

Carbon and silicon-based intelligence will merge to form a single global consciousness.

The world being described here is not much different from the one presented in movies like The Matrix or Ghost in the Shell: a world where humans have been physically altered in order to be linked with the Internet.

In both movies, there is a version of the “single global consciousness” where cyberized humans are fully merged into the virtual world. Yet, although such technology has been portrayed as science fiction for years, the fact is that the Singularity is now a very real possibility.

As US Col. Thomas Adams stated, technology “is rapidly taking us to a place where we may not want to go, but probably are unable to avoid.”

He should know.

Western militaries have been preparing for the Singularity for some time. In this context, where war becomes literally ingrained, the dystopic vision of dark science fiction is promoted as a real-world solution.

Although Col. Adams is right to suggest that we are heading in a direction that we do not wish to go, he is wrong to suggest that we are unable to avoid it. As we stand currently, the ability to avoid losing our own humanity in a fog of computer circuits and switchboards is still well within our grasp.

Admittedly, because of the incremental approach taken by movements such as Singularity, resistance is all the more difficult. However, it is time the people of the world decided exactly where their line in the sand will be, and it is time for them to draw that line.

While our own humanity may be at stake, we can save it by uttering one solitary word:

“No.”
